[OpenVINS] Code Review

ros_subscribe_msckf.cpp

- main() [Done]

- callback_inertial() [Done]

- sys->feed_measurement_imu() [Done]

- propagator->feed_imu() [Done]

- initializer->feed_imu() [Done]

- callback_monocular()

- sys->feed_measurement_monocular() [Done]

- trackFEATS->feed_monocular() [Done]

[ If the "use_klt" parameter is true, TrackKLT is used; otherwise TrackDescriptor is used. Our system uses TrackKLT, so we follow TrackKLT. ]

- perform_detection_monocular() [Done]

- perform_matching() [Done]

- undistort_point() [Done]

- undistort_point_brown() [Done]

- undistort_point() [Done]

- database->update_feature() [Done]

- try_to_initialize() [Done]

- initializer->initialize_with_imu() [Done]

- [IMU] state->_imu->set_value() [Done]

- [Type::PoseJPL] _pose->set_value() [Done]

- [Type::JPLQuat] _q->set_value() [Done]

- ov_core::quat_2_Rot() [Done]

- [Type::Vec] _p->set_value() [Done]

- [Type::JPLQuat] _q->set_value() [Done]

- [Type::Vec] _v->set_value() [Done]

- [Type::Vec] _bg->set_value() [Done]

- [Type::Vec] _ba->set_value() [Done]

- [Type::PoseJPL] _pose->set_value() [Done]

- [IMU] state->_imu->set_fej() [Done]

- [Type::PoseJPL] _pose->set_fej() [Done]

- [Type::JPLQuat] _q->set_fej() [Done]

- ov_core::quat_2_Rot() [Done]

- [Type::JPLQuat] _q->set_fej() [Done]

- [Type::Vec] _v->set_fej() [Done]

- [Type::Vec] _bg->set_fej() [Done]

- [Type::Vec] _ba->set_fej() [Done]

- [Type::PoseJPL] _pose->set_fej() [Done]

- cleanup_measurements() [Done]

- clean_older_measurements() [Done]

- do_feature_propagate_update()

- [Propagator] propagator->propagate_and_clone() [Done]

- select_imu_readings() [Done]

- Propagator::interpolate_data() [Done]

- predict_and_compute() [half done]

- predict_mean_rk4() [Done]

[ If the "use_rk4_integration" parameter is true, predict_mean_rk4() is used; otherwise predict_mean_discrete() is used. Our system uses RK4 integration, so we follow predict_mean_rk4(). ]

- predict_mean_rk4() [Done]

- StateHelper::EKFPropagation() [half done]

- StateHelper::augment_clone() [half done]

- StateHelper::clone() [Done]

- check_if_same_variable() [Done]

- type_check->clone() [Done]

- new_clone->set_local_id() [Done]

- StateHelper::clone() [Done]

- select_imu_readings() [Done]

- trackFEATS->get_feature_database()->features_not_containing_newer() [Done]

- trackFEATS->get_feature_database()->features_containing() [Done]

- trackFEATS->get_feature_database()->get_feature() [Done]

- StateHelper::marginalize_slam() [Done]

- StateHelper::marginalize() [Done]

- [UpdaterMSCKF]

- clean_old_measurements() [Done]

- initializer_feat->single_triangulation() [half done]

- initializer_feat->single_gaussnewton() [half done]

- FeatureInitializer::compute_error() [half done]

- LandmarkRepresentation::is_relative_representation()

- UpdateHelper::get_feature_jacobian_full()

- UpdateHelper::get_feature_jacobian_representation()

- UpdateHelper::get_feature_jacobian_intrinsics()

- updaterSLAM->update()

- [Propagator] propagator->propagate_and_clone() [Done]

- trackFEATS->feed_monocular() [Done]

- sys->feed_measurement_monocular() [Done]

- updaterMSCKF->update()

main()

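Sets up ROS and starts the subscribers.

It registers callback_inertial for the IMU topic and callback_monocular for the camera topic (with two cameras, callback_stereo is registered through the synchronizer instead).

Nothing else here has much bearing on the algorithm, so let's move on.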

// Main function

int main(int argc, char** argv) {

// Launch our ros node

ros::init(argc, argv, "run_subscribe_msckf");

ros::NodeHandle nh("~");

// Create our VIO system

VioManagerOptions params = parse_ros_nodehandler(nh);

sys = new VioManager(params);

viz = new RosVisualizer(nh, sys);

//===================================================================================

//===================================================================================

//===================================================================================

// Our camera topics (left and right stereo)

std::string topic_imu;

std::string topic_camera0, topic_camera1;

nh.param<std::string>("topic_imu", topic_imu, "/imu0");

nh.param<std::string>("topic_camera0", topic_camera0, "/cam0/image_raw");

nh.param<std::string>("topic_camera1", topic_camera1, "/cam1/image_raw");

// Logic for sync stereo subscriber

// https://answers.ros.org/question/96346/subscribe-to-two-image_raws-with-one-function/?answer=96491#post-id-96491

message_filters::Subscriber<sensor_msgs::Image> image_sub0(nh,topic_camera0.c_str(),1);

message_filters::Subscriber<sensor_msgs::Image> image_sub1(nh,topic_camera1.c_str(),1);

//message_filters::TimeSynchronizer<sensor_msgs::Image,sensor_msgs::Image> sync(image_sub0,image_sub1,5);

typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::Image> sync_pol;

message_filters::Synchronizer<sync_pol> sync(sync_pol(5), image_sub0,image_sub1);

// Create subscribers

ros::Subscriber subimu = nh.subscribe(topic_imu.c_str(), 9999, callback_inertial);

ros::Subscriber subcam;

if(params.state_options.num_cameras == 1) {

ROS_INFO("subscribing to: %s", topic_camera0.c_str());

subcam = nh.subscribe(topic_camera0.c_str(), 1, callback_monocular);

} else if(params.state_options.num_cameras == 2) {

ROS_INFO("subscribing to: %s", topic_camera0.c_str());

ROS_INFO("subscribing to: %s", topic_camera1.c_str());

sync.registerCallback(boost::bind(&callback_stereo, _1, _2));

} else {

ROS_ERROR("INVALID MAX CAMERAS SELECTED!!!");

std::exit(EXIT_FAILURE);

}

//===================================================================================

//===================================================================================

//===================================================================================

// Spin off to ROS

ROS_INFO("done...spinning to ros");

ros::spin();

// Final visualization

viz->visualize_final();

// Finally delete our system

delete sys;

delete viz;

// Done!

return EXIT_SUCCESS;

}

callback_inertial()

Passes the message timestamp, together with the angular velocity (gyroscope) and linear acceleration (accelerometer) readings, to feed_measurement_imu().

We skip the visualization part for now.

void callback_inertial(const sensor_msgs::Imu::ConstPtr& msg) {

// convert into correct format

double timem = msg->header.stamp.toSec();

Eigen::Vector3d wm, am;

wm << msg->angular_velocity.x, msg->angular_velocity.y, msg->angular_velocity.z;

am << msg->linear_acceleration.x, msg->linear_acceleration.y, msg->linear_acceleration.z;

// send it to our VIO system

sys->feed_measurement_imu(timem, wm, am);

viz->visualize_odometry(timem);

}

sys->feed_measurement_imu()

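Forwards the received IMU readings and their timestamp to the propagator.

The second part passes the same readings to the initializer, but only while the VIO system has not been initialized yet (is_initialized_vio is false).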

void VioManager::feed_measurement_imu(double timestamp, Eigen::Vector3d wm, Eigen::Vector3d am) {

// Push back to our propagator

propagator->feed_imu(timestamp,wm,am);

// Push back to our initializer

if(!is_initialized_vio) {

initializer->feed_imu(timestamp, wm, am);

}

}

propagator->feed_imu()

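Stores the incoming readings as IMUDATA structs in the imu_data vector.

Readings more than 20 seconds older than the newest one are erased, to keep propagation fast and memory bounded.

As the author's own TODO notes, a better memory-management scheme here is worth investigating.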

/**

* @brief Stores incoming inertial readings

* @param timestamp Timestamp of imu reading

* @param wm Gyro angular velocity reading

* @param am Accelerometer linear acceleration reading

*/

void feed_imu(double timestamp, Eigen::Vector3d wm, Eigen::Vector3d am) {

// Create our imu data object

IMUDATA data;

data.timestamp = timestamp;

data.wm = wm;

data.am = am;

// Append it to our vector

imu_data.emplace_back(data);

// Sort our imu data (handles any out of order measurements)

//std::sort(imu_data.begin(), imu_data.end(), [](const IMUDATA i, const IMUDATA j) {

// return i.timestamp < j.timestamp;

//});

// Loop through and delete imu messages that are older than 20 seconds

// TODO: we should probably have more elegant logic than this

// TODO: but this prevents unbounded memory growth and slow prop with high freq imu

auto it0 = imu_data.begin();

while(it0 != imu_data.end()) {

if(timestamp-(*it0).timestamp > 20) {

it0 = imu_data.erase(it0);

} else {

it0++;

}

}

}
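The original loop checks every element on every IMU message, and each vector::erase near the front shifts all remaining elements. One possible direction for the TODO is a std::deque that is pruned from the front. This is a minimal sketch, not OpenVINS code. ImuSample and push_and_prune are hypothetical names, and the sketch assumes readings arrive in timestamp order (the original loop does not rely on ordering).

```cpp
#include <cassert>
#include <deque>

// Hypothetical stand-in for OpenVINS' IMUDATA; only the timestamp matters here.
struct ImuSample {
  double timestamp;
  double wm[3];  // gyro angular velocity
  double am[3];  // accelerometer linear acceleration
};

// Append a reading, then drop readings older than `horizon` seconds relative
// to it, using the same condition as the original (erase if now - t > 20).
// ASSUMPTION: timestamps are non-decreasing, so stale readings are always at
// the front and each pop_front() is O(1).
void push_and_prune(std::deque<ImuSample>& buf, const ImuSample& s,
                    double horizon = 20.0) {
  buf.push_back(s);
  while (!buf.empty() && s.timestamp - buf.front().timestamp > horizon) {
    buf.pop_front();
  }
}
```

After feeding readings at t = 0, 1, ..., 25 s, only those with t >= 5 remain. This matches the original "> 20" condition.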

initializer->feed_imu()

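The initializer keeps its own imu_data vector, separate from the propagator's. It only retains measurements from the last three initialization windows: readings older than timestamp - 3*_window_length are erased.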

void InertialInitializer::feed_imu(double timestamp, Eigen::Matrix<double,3,1> wm, Eigen::Matrix<double,3,1> am) {

// Create our imu data object

IMUDATA data;

data.timestamp = timestamp;

data.wm = wm;

data.am = am;

// Append it to our vector

imu_data.emplace_back(data);

// Delete all measurements older than three of our initialization windows

auto it0 = imu_data.begin();

while(it0 != imu_data.end() && it0->timestamp < timestamp-3*_window_length) {

it0 = imu_data.erase(it0);

}

}

callback_monocular()

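Callback that receives an image message and does the initial processing.

It does the following:

1. Converts the image message to a grayscale (MONO8) image with cv_bridge. If the conversion throws, it logs the error and returns.

2. If the buffer is still empty, fills it with this image and returns.

3. Feeds the buffered timestamp and image to the VIO system.

4. Runs the visualization.

5. Note that it always feeds the "previously" received image. The current image is put into the buffer only after the previous one has been fed, so the VIO system runs one frame behind the camera.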
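Steps 2 and 5 amount to a one-frame delay buffer. Below is a minimal sketch of that pattern in plain C++17. A hypothetical Frame type stands in for the time_buffer/img0_buffer pair, and OneFrameDelay is not an OpenVINS class.

```cpp
#include <cassert>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical frame type standing in for (time_buffer, img0_buffer).
struct Frame {
  double timestamp;
  std::vector<unsigned char> pixels;  // stand-in for a MONO8 cv::Mat
};

// The first frame only fills the buffer. Every later call returns the
// previous frame (the one to feed to the VIO system) and buffers the current one.
class OneFrameDelay {
 public:
  std::optional<Frame> push(Frame current) {
    if (!buffer_) {                        // buffer empty: fill it, feed nothing
      buffer_ = std::move(current);
      return std::nullopt;
    }
    Frame previous = std::move(*buffer_);  // this is what gets fed
    buffer_ = std::move(current);          // move buffer forward
    return previous;
  }

 private:
  std::optional<Frame> buffer_;
};
```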

void callback_monocular(const sensor_msgs::ImageConstPtr& msg0) {

// Get the image

cv_bridge::CvImageConstPtr cv_ptr;

try {

cv_ptr = cv_bridge::toCvShare(msg0, sensor_msgs::image_encodings::MONO8);

} catch (cv_bridge::Exception &e) {

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

// Fill our buffer if we have not

if(img0_buffer.rows == 0) {

time_buffer = cv_ptr->header.stamp.toSec();

img0_buffer = cv_ptr->image.clone();

return;

}

// send it to our VIO system

sys->feed_measurement_monocular(time_buffer, img0_buffer, 0);

viz->visualize();

// move buffer forward

time_buffer = cv_ptr->header.stamp.toSec();

img0_buffer = cv_ptr->image.clone();

}

sys->feed_measurement_monocular()

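This is where the overall image-based processing runs.

The steps are:

1. Measure processing time (rT1 and rT2).

2. Downsample: if params.downsample_cameras is set, the image is halved in each dimension with cv::pyrDown here.

3. Feed the image to the TrackKLT or TrackDescriptor tracker (and to the ArUco tracker, if one exists).

4. If VIO has not been initialized yet, try to initialize it. If that fails, return.

5. Run propagation and update (do_feature_propagate_update()).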

void VioManager::feed_measurement_monocular(double timestamp, cv::Mat& img0, size_t cam_id) {

// Start timing

rT1 = boost::posix_time::microsec_clock::local_time();

// Downsample if we are downsampling

if(params.downsample_cameras) {

cv::Mat img0_temp;

cv::pyrDown(img0,img0_temp,cv::Size(img0.cols/2.0,img0.rows/2.0));

img0 = img0_temp.clone();

}

// Feed our trackers

trackFEATS->feed_monocular(timestamp, img0, cam_id);

// If ArUco is available, then also pass the image to it

if(trackARUCO != nullptr) {

trackARUCO->feed_monocular(timestamp, img0, cam_id);

}

rT2 = boost::posix_time::microsec_clock::local_time();

// If we do not have VIO initialization, then try to initialize

// TODO: Or if we are trying to reset the system, then do that here!

if(!is_initialized_vio) {

is_initialized_vio = try_to_initialize();

if(!is_initialized_vio) return;

}

// Call on our propagate and update function

do_feature_propagate_update(timestamp);

}

trackFEATS->feed_monocular()

void TrackKLT::feed_monocular(double timestamp, cv::Mat &img, size_t cam_id) {

// Start timing

rT1 = boost::posix_time::microsec_clock::local_time();

// Lock this data feed for this camera

std::unique_lock<std::mutex> lck(mtx_feeds.at(cam_id));

// Histogram equalize

cv::equalizeHist(img, img);

// Extract the new image pyramid

std::vector<cv::Mat> imgpyr;

cv::buildOpticalFlowPyramid(img, imgpyr, win_size, pyr_levels);

rT2 = boost::posix_time::microsec_clock::local_time();

// If we didn't have any successful tracks last time, just extract this time

// This also handles the tracking initialization on the first call to this extractor

if(pts_last[cam_id].empty()) {

// Detect new features

perform_detection_monocular(imgpyr, pts_last[cam_id], ids_last[cam_id]);

// Save the current image and pyramid

img_last[cam_id] = img.clone();

img_pyramid_last[cam_id] = imgpyr;

return;

}

// First we should make sure that the last images have enough features so we can do KLT

// This will "top-off" our number of tracks so we always have a constant number

perform_detection_monocular(img_pyramid_last[cam_id], pts_last[cam_id], ids_last[cam_id]);

rT3 = boost::posix_time::microsec_clock::local_time();

//===================================================================================

//===================================================================================

// Debug

//printf("current points = %d,%d\n",(int)pts_left_last.size(),(int)pts_right_last.size());

// Our return success masks, and predicted new features

std::vector<uchar> mask_ll;

std::vector<cv::KeyPoint> pts_left_new = pts_last[cam_id];

// Let's track temporally

perform_matching(img_pyramid_last[cam_id],imgpyr,pts_last[cam_id],pts_left_new,cam_id,cam_id,mask_ll);

rT4 = boost::posix_time::microsec_clock::local_time();

//===================================================================================

//===================================================================================

// If any of our mask is empty, that means we didn't have enough to do ransac, so just return

if(mask_ll.empty()) {

img_last[cam_id] = img.clone();

img_pyramid_last[cam_id] = imgpyr;

pts_last[cam_id].clear();

ids_last[cam_id].clear();

printf(RED "[KLT-EXTRACTOR]: Failed to get enough points to do RANSAC, resetting.....\n" RESET);

return;

}

// Get our "good tracks"

std::vector<cv::KeyPoint> good_left;

std::vector<size_t> good_ids_left;

// Loop through all left points

for(size_t i=0; i<pts_left_new.size(); i++) {

// Ensure we do not have any bad KLT tracks (i.e., points are negative)

if(pts_left_new[i].pt.x < 0 || pts_left_new[i].pt.y < 0)

continue;

// If it is a good track, and also tracked from left to right

if(mask_ll[i]) {

good_left.push_back(pts_left_new[i]);

good_ids_left.push_back(ids_last[cam_id][i]);

}

}

//===================================================================================

//===================================================================================

// Update our feature database with these new observations

for(size_t i=0; i<good_left.size(); i++) {

cv::Point2f npt_l = undistort_point(good_left.at(i).pt, cam_id);

database->update_feature(good_ids_left.at(i), timestamp, cam_id,

good_left.at(i).pt.x, good_left.at(i).pt.y,

npt_l.x, npt_l.y);

}

// Move forward in time

img_last[cam_id] = img.clone();

img_pyramid_last[cam_id] = imgpyr;

pts_last[cam_id] = good_left;

ids_last[cam_id] = good_ids_left;

rT5 = boost::posix_time::microsec_clock::local_time();

// Timing information

//printf("[TIME-KLT]: %.4f seconds for pyramid\n",(rT2-rT1).total_microseconds() * 1e-6);

//printf("[TIME-KLT]: %.4f seconds for detection\n",(rT3-rT2).total_microseconds() * 1e-6);

//printf("[TIME-KLT]: %.4f seconds for temporal klt\n",(rT4-rT3).total_microseconds() * 1e-6);

//printf("[TIME-KLT]: %.4f seconds for feature DB update (%d features)\n",(rT5-rT4).total_microseconds() * 1e-6, (int)good_left.size());

//printf("[TIME-KLT]: %.4f seconds for total\n",(rT5-rT1).total_microseconds() * 1e-6);

}

// Start timing

rT1 = boost::posix_time::microsec_clock::local_time();

Starts timing the processing.

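// Lock this data feed for this camera

std::unique_lock<std::mutex> lck(mtx_feeds.at(cam_id));

Locks this camera's mutex (the std::unique_lock releases it when the function returns), so other calls for the same camera cannot modify its tracking state while this one runs.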

// Histogram equalize

cv::equalizeHist(img, img);

Applies histogram equalization in place, spreading out the intensity distribution to improve contrast.

// Extract the new image pyramid

std::vector<cv::Mat> imgpyr;

cv::buildOpticalFlowPyramid(img, imgpyr, win_size, pyr_levels);

rT2 = boost::posix_time::microsec_clock::local_time();

Builds a new image pyramid from the image, in the form cv::calcOpticalFlowPyrLK() accepts, then records the time.

// If we didn't have any successful tracks last time, just extract this time

// This also handles the tracking initialization on the first call to this extractor

if(pts_last[cam_id].empty()) {

// Detect new features

perform_detection_monocular(imgpyr, pts_last[cam_id], ids_last[cam_id]);

// Save the current image and pyramid

img_last[cam_id] = img.clone();

img_pyramid_last[cam_id] = imgpyr;

return;

}

This branch runs if there were no successful tracks last time (pts_last is empty, which is also the case on the very first call). Features are detected on the current pyramid with perform_detection_monocular(). The current image and pyramid are then saved as the "last" image and pyramid, and the function returns.

// First we should make sure that the last images have enough features so we can do KLT

// This will "top-off" our number of tracks so we always have a constant number

perform_detection_monocular(img_pyramid_last[cam_id], pts_last[cam_id], ids_last[cam_id]);

The previous image may not have as many features as the parameter requires. To cover that case, perform_detection_monocular() is run again on the previous pyramid, topping off the tracks to a constant number before KLT tracking.

rT3 = boost::posix_time::microsec_clock::local_time();

Records the time after the detection step.

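// Debug

//printf("current points = %d,%d\n",(int)pts_left_last.size(),(int)pts_right_last.size());

Debug output for the number of detected features.

It is commented out and not used here.

Judging from the left/right names (pts_left_last, pts_right_last), it looks like a leftover from the stereo code.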

// Our return success masks, and predicted new features

std::vector<uchar> mask_ll;

std::vector<cv::KeyPoint> pts_left_new = pts_last[cam_id];

perform_matching() will fill mask_ll with one flag per keypoint, indicating whether that keypoint was tracked successfully.

pts_left_new starts as a copy of pts_last, which serves as the initial guess for the flow. After perform_matching(), it holds the positions in the new image tracked from the previous keypoints.

// Let's track temporally

perform_matching(img_pyramid_last[cam_id],imgpyr,pts_last[cam_id],pts_left_new,cam_id,cam_id,mask_ll);

perform_matching() uses the previous image pyramid, the previous keypoints and the new image pyramid to track the keypoints into the new image, and stores the tracked positions in pts_left_new. For each point, the combined result of KLT tracking and RANSAC outlier rejection is stored in mask_ll.

rT4 = boost::posix_time::microsec_clock::local_time();

Records the time after temporal tracking.

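// If any of our mask is empty, that means we didn't have enough to do ransac, so just return

if(mask_ll.empty()) {

img_last[cam_id] = img.clone();

img_pyramid_last[cam_id] = imgpyr;

pts_last[cam_id].clear();

ids_last[cam_id].clear();

printf(RED "[KLT-EXTRACTOR]: Failed to get enough points to do RANSAC, resetting.....\n" RESET);

return;

}

This branch runs if mask_ll is empty. Given how perform_matching() is written, that only happens when there were no keypoints to track at all. With fewer than 10 points, perform_matching() instead returns an all-zero mask, so those tracks are dropped by the loop below rather than here.

In this branch the current image and pyramid become the "last" image and pyramid, the previous keypoints and ids are cleared, and the function returns.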

// Get our "good tracks"

std::vector<cv::KeyPoint> good_left;

std::vector<size_t> good_ids_left;

Creates the vectors that will hold the good tracks (keypoints and their ids) after tracking.

// Loop through all left points

for(size_t i=0; i<pts_left_new.size(); i++) {

// Ensure we do not have any bad KLT tracks (i.e., points are negative)

if(pts_left_new[i].pt.x < 0 || pts_left_new[i].pt.y < 0)

continue;

// If it is a good track, and also tracked from left to right

if(mask_ll[i]) {

good_left.push_back(pts_left_new[i]);

good_ids_left.push_back(ids_last[cam_id][i]);

}

}

Tracks with negative coordinates are skipped as bad KLT results. Only keypoints whose mask_ll entry is 1 are copied into good_left, and their ids into good_ids_left. The "left to right" wording in the comment is another stereo leftover; here the track is temporal.
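The filtering loop above can be sketched without OpenCV as follows. Pt stands in for cv::KeyPoint::pt, and select_good_tracks is a hypothetical name. Unlike the original, the sketch explicitly checks that the mask and the ids have one entry per point.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::KeyPoint::pt

// Keep point i only if it has non-negative coordinates and mask[i] != 0,
// carrying its id along so that ids stay aligned with points.
void select_good_tracks(const std::vector<Pt>& pts_new,
                        const std::vector<unsigned char>& mask,
                        const std::vector<std::size_t>& ids,
                        std::vector<Pt>& good_pts,
                        std::vector<std::size_t>& good_ids) {
  // The original indexes mask and ids for every point without checking sizes.
  assert(mask.size() == pts_new.size() && ids.size() == pts_new.size());
  for (std::size_t i = 0; i < pts_new.size(); ++i) {
    if (pts_new[i].x < 0 || pts_new[i].y < 0) continue;  // bad KLT track
    if (mask[i]) {
      good_pts.push_back(pts_new[i]);
      good_ids.push_back(ids[i]);
    }
  }
}
```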

perform_detection_monocular()

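The purpose of this function is to detect features until the count set by the num_features parameter is reached. Only the base pyramid level, img0pyr.at(0), is actually used.

The detected keypoints are appended to the pts0 argument, and their new ids to ids0.

This part involves some grid arithmetic, so let's go through it line by line.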

void TrackKLT::perform_detection_monocular(const std::vector<cv::Mat> &img0pyr, std::vector<cv::KeyPoint> &pts0, std::vector<size_t> &ids0) {

// Create a 2D occupancy grid for this current image

// Note that we scale this down, so that each grid point is equal to a set of pixels

// This means that we will reject points that are less than grid_px_size pixels away from existing features

// TODO: figure out why I need to add the windowsize of the klt to handle features that are outside the image bound

// TODO: I assume this is because klt of features at the corners is not really well defined, thus if it doesn't get a match it will be out-of-bounds

Eigen::MatrixXi grid_2d = Eigen::MatrixXi::Zero((int)(img0pyr.at(0).rows/min_px_dist)+15, (int)(img0pyr.at(0).cols/min_px_dist)+15);

auto it0 = pts0.begin();

auto it2 = ids0.begin();

while(it0 != pts0.end()) {

// Get current left keypoint

cv::KeyPoint kpt = *it0;

// Check if this keypoint is near another point

if(grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) == 1) {

it0 = pts0.erase(it0);

it2 = ids0.erase(it2);

continue;

}

// Else we are good, move forward to the next point

grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) = 1;

it0++;

it2++;

}

// First compute how many more features we need to extract from this image

int num_featsneeded = num_features - (int)pts0.size();

// If we don't need any features, just return

if(num_featsneeded < 1)

return;

// Extract our features (use fast with griding)

std::vector<cv::KeyPoint> pts0_ext;

Grider_FAST::perform_griding(img0pyr.at(0), pts0_ext, num_featsneeded, grid_x, grid_y, threshold, true);

// Now, reject features that are close to a current feature

std::vector<cv::KeyPoint> kpts0_new;

std::vector<cv::Point2f> pts0_new;

for(auto& kpt : pts0_ext) {

// See if there is a point at this location

if(grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) == 1)

continue;

// Else let's add it!

kpts0_new.push_back(kpt);

pts0_new.push_back(kpt.pt);

grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) = 1;

}

// Loop through and record only ones that are valid

for(size_t i=0; i<pts0_new.size(); i++) {

// update the uv coordinates

kpts0_new.at(i).pt = pts0_new.at(i);

// append the new uv coordinate

pts0.push_back(kpts0_new.at(i));

// move id forward and append this new point

size_t temp = ++currid;

ids0.push_back(temp);

}

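}

Eigen::MatrixXi grid_2d = Eigen::MatrixXi::Zero((int)(img0pyr.at(0).rows/min_px_dist)+15, (int)(img0pyr.at(0).cols/min_px_dist)+15);

Eigen::MatrixXi is a typedef for Eigen::Matrix<int, Eigen::Dynamic, Eigen::Dynamic>.

So the elements are ints, and the matrix gets the number of rows and cols passed as arguments. That number is the image size divided by min_px_dist, so each grid cell covers a min_px_dist x min_px_dist block of pixels. The extra 15 cells are attributed by the author's TODO to features that end up outside the image bounds.

Since it is created with Eigen::MatrixXi::Zero, all entries start at 0.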

auto it0 = pts0.begin();

auto it2 = ids0.begin();

pts0 and ids0 are declared in the function signature as:

std::vector<cv::KeyPoint> &pts0

std::vector<size_t> &ids0

begin() returns an iterator to the first element of a vector.

while(it0 != pts0.end()) {

// Get current left keypoint

cv::KeyPoint kpt = *it0;

// Check if this keypoint is near another point

if(grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) == 1) {

it0 = pts0.erase(it0);

it2 = ids0.erase(it2);

continue;

}

// Else we are good, move forward to the next point

grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) = 1;

it0++;

it2++;

}

As the loop runs, it0 and it2 advance together one element at a time. When a keypoint is erased, they are instead set to the iterators returned by erase(), which point to the next element.

The loop ends when it0 reaches pts0.end(), the past-the-end iterator (not the last element).

Each keypoint's pixel coordinates are divided by min_px_dist to find its grid cell. If that cell is already 1, the keypoint and its id are erased as duplicates of an earlier keypoint in the same cell. Otherwise the cell is set to 1 and the keypoint is kept.

So this step marks the cells occupied by the existing keypoints in the current image's 2D occupancy grid, and thins out keypoints that fall into the same cell.
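Here is a stdlib-only sketch of this occupancy-grid thinning. It assumes a std::vector<std::vector<int>> in place of Eigen::MatrixXi and a hypothetical Pt struct in place of cv::KeyPoint; thin_by_grid is not an OpenVINS function. Like the original, it assumes non-negative coordinates that fall inside the grid.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::KeyPoint::pt

// Each point maps to cell (y / min_px_dist, x / min_px_dist). The first point
// in a cell occupies it; later points in the same cell are erased with their id.
void thin_by_grid(std::vector<Pt>& pts, std::vector<std::size_t>& ids,
                  std::vector<std::vector<int>>& grid, int min_px_dist) {
  auto it0 = pts.begin();
  auto it2 = ids.begin();
  while (it0 != pts.end()) {
    int r = static_cast<int>(it0->y / min_px_dist);
    int c = static_cast<int>(it0->x / min_px_dist);
    if (grid[r][c] == 1) {  // cell already occupied: drop the duplicate
      it0 = pts.erase(it0);
      it2 = ids.erase(it2);
      continue;
    }
    grid[r][c] = 1;  // occupy the cell and keep the point
    ++it0;
    ++it2;
  }
}
```

With min_px_dist = 10, the points (10, 10) and (12, 14) both land in cell (1, 1), so only the first one survives.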

// First compute how many more features we need to extract from this image

int num_featsneeded = num_features - (int)pts0.size();

The number of features we want (num_features) is set as a parameter at startup.

Subtracting the current number of features from it gives how many more features we need.

// If we don't need any features, just return

if(num_featsneeded < 1)

return;

If we already have enough features, just return.

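// Extract our features (use fast with griding)

std::vector<cv::KeyPoint> pts0_ext;

Grider_FAST::perform_griding(img0pyr.at(0), pts0_ext, num_featsneeded, grid_x, grid_y, threshold, true);

This is where the missing keypoints are detected.

Note in particular that the ov_core::Grider_FAST class is used.

As the name suggests, Grider_FAST::perform_griding can be thought of as running the FAST corner detector over a grid_x by grid_y grid of cells on the image. This spreads the new features across the image instead of letting them cluster in highly textured areas.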

// Now, reject features that are close to a current feature

std::vector<cv::KeyPoint> kpts0_new;

std::vector<cv::Point2f> pts0_new;

for(auto& kpt : pts0_ext) {

// See if there is a point at this location

if(grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) == 1)

continue;

// Else let's add it!

kpts0_new.push_back(kpt);

pts0_new.push_back(kpt.pt);

grid_2d((int)(kpt.pt.y/min_px_dist),(int)(kpt.pt.x/min_px_dist)) = 1;

}

Each newly detected feature is checked against the grid. If its cell is already occupied, either by an existing keypoint or by a new one accepted earlier in this loop, the feature is rejected. Otherwise it is accepted and its cell is marked as occupied.

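// Loop through and record only ones that are valid

for(size_t i=0; i<pts0_new.size(); i++) {

// update the uv coordinates

kpts0_new.at(i).pt = pts0_new.at(i);

// append the new uv coordinate

pts0.push_back(kpts0_new.at(i));

// move id forward and append this new point

size_t temp = ++currid;

ids0.push_back(temp);

}

Finally, the new keypoints are appended to the existing ones in pts0. Each gets a new unique id, appended to ids0. Because currid is incremented first, the new ids start at the previous currid + 1.

Note that kpts0_new.at(i).pt = pts0_new.at(i) changes nothing here, since pts0_new[i] was copied from kpts0_new[i].pt in the previous loop.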
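The top-off steps can be sketched the same way: count how many features are missing, reject candidates in occupied cells, and append the rest with fresh ids. In this sketch the candidates are passed in directly, which is an assumption; in OpenVINS they come from Grider_FAST::perform_griding. top_off and Pt are hypothetical names. Like the loop above, the sketch does not cap how many candidates are accepted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::KeyPoint::pt

// Return early if enough features exist. Otherwise accept each candidate whose
// grid cell is free, mark the cell, and give it the next id from currid.
void top_off(std::vector<Pt>& pts, std::vector<std::size_t>& ids,
             const std::vector<Pt>& candidates,
             std::vector<std::vector<int>>& grid, int min_px_dist,
             int num_features, std::size_t& currid) {
  int num_needed = num_features - static_cast<int>(pts.size());
  if (num_needed < 1) return;  // already have enough features
  for (const Pt& p : candidates) {
    int r = static_cast<int>(p.y / min_px_dist);
    int c = static_cast<int>(p.x / min_px_dist);
    if (grid[r][c] == 1) continue;  // too close to an existing feature
    grid[r][c] = 1;
    pts.push_back(p);
    ids.push_back(++currid);  // pre-increment: first new id is old currid + 1
  }
}
```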

perform_matching()

ov_core::TrackKLT class | OpenVINS (docs.openvins.com)

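perform_matching() performs tracking (optical flow) using the previous image pyramid img0pyr, the previous keypoints kpts0 and the current image pyramid img1pyr. The kpts0 and kpts1 arguments are identical on entry, because kpts1 is a copy of kpts0 that serves as the initial guess. On return, kpts1 holds the tracked positions. mask_out gets one entry per keypoint: 1 if that keypoint was tracked successfully and passed RANSAC, 0 otherwise.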

void TrackKLT::perform_matching(const std::vector<cv::Mat>& img0pyr, const std::vector<cv::Mat>& img1pyr,

std::vector<cv::KeyPoint>& kpts0, std::vector<cv::KeyPoint>& kpts1,

size_t id0, size_t id1,

std::vector<uchar>& mask_out) {

// We must have equal vectors

assert(kpts0.size() == kpts1.size());

// Return if we don't have any points

if(kpts0.empty() || kpts1.empty())

return;

// Convert keypoints into points (stupid opencv stuff)

std::vector<cv::Point2f> pts0, pts1;

for(size_t i=0; i<kpts0.size(); i++) {

pts0.push_back(kpts0.at(i).pt);

pts1.push_back(kpts1.at(i).pt);

}

// If we don't have enough points for ransac just return empty

// We set the mask to be all zeros since all points failed RANSAC

if(pts0.size() < 10) {

for(size_t i=0; i<pts0.size(); i++)

mask_out.push_back((uchar)0);

return;

}

// Now do KLT tracking to get the valid new points

std::vector<uchar> mask_klt;

std::vector<float> error;

cv::TermCriteria term_crit = cv::TermCriteria(cv::TermCriteria::COUNT + cv::TermCriteria::EPS, 15, 0.01);

cv::calcOpticalFlowPyrLK(img0pyr, img1pyr, pts0, pts1, mask_klt, error, win_size, pyr_levels, term_crit, cv::OPTFLOW_USE_INITIAL_FLOW);

// Normalize these points, so we can then do ransac

// We don't want to do ransac on distorted image uvs since the mapping is nonlinear

std::vector<cv::Point2f> pts0_n, pts1_n;

for(size_t i=0; i<pts0.size(); i++) {

pts0_n.push_back(undistort_point(pts0.at(i),id0));

pts1_n.push_back(undistort_point(pts1.at(i),id1));

}

// Do RANSAC outlier rejection (note since we normalized the max pixel error is now in the normalized coords)

std::vector<uchar> mask_rsc;

double max_focallength_img0 = std::max(camera_k_OPENCV.at(id0)(0,0),camera_k_OPENCV.at(id0)(1,1));

double max_focallength_img1 = std::max(camera_k_OPENCV.at(id1)(0,0),camera_k_OPENCV.at(id1)(1,1));

double max_focallength = std::max(max_focallength_img0,max_focallength_img1);

cv::findFundamentalMat(pts0_n, pts1_n, cv::FM_RANSAC, 1/max_focallength, 0.999, mask_rsc);

// Loop through and record only ones that are valid

for(size_t i=0; i<mask_klt.size(); i++) {

auto mask = (uchar)((i < mask_klt.size() && mask_klt[i] && i < mask_rsc.size() && mask_rsc[i])? 1 : 0);

mask_out.push_back(mask);

}

// Copy back the updated positions

for(size_t i=0; i<pts0.size(); i++) {

kpts0.at(i).pt = pts0.at(i);

kpts1.at(i).pt = pts1.at(i);

}

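}

// We must have equal vectors

assert(kpts0.size() == kpts1.size());

First, check that kpts0 and kpts1 contain the same number of keypoints.

Before the call, kpts1 is a copy of kpts0, so they should match.

assert is a macro that aborts the program with an error message if the condition is false. It is compiled out when NDEBUG is defined, as in typical release builds.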

// Return if we don't have any points

if(kpts0.empty() || kpts1.empty())

return;

If there are no keypoints at all, return and leave mask_out empty.

// Convert keypoints into points (stupid opencv stuff)

std::vector<cv::Point2f> pts0, pts1;

for(size_t i=0; i<kpts0.size(); i++) {

pts0.push_back(kpts0.at(i).pt);

pts1.push_back(kpts1.at(i).pt);

}

cv::calcOpticalFlowPyrLK(), used below, takes cv::Point2f positions, so the cv::KeyPoint objects must first be converted to cv::Point2f.

Having to do this extra conversion is why the author calls it "stupid opencv stuff"... lol.

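// If we don't have enough points for ransac just return empty

// We set the mask to be all zeros since all points failed RANSAC

if(pts0.size() < 10) {

for(size_t i=0; i<pts0.size(); i++)

mask_out.push_back((uchar)0);

return;

}

RANSAC will be run later, and it cannot run without enough points, so the function returns here. The 8-point algorithm needs at least 8 correspondences; 10 presumably leaves some margin.

Since RANSAC cannot be run, mask_out is filled with zeros. One might ask whether this is necessary, and it is. feed_monocular() indexes mask_ll[i] for every tracked point, so the mask needs one entry per point, and all zeros means every track is dropped.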

// Now do KLT tracking to get the valid new points

std::vector<uchar> mask_klt;

std::vector<float> error;

cv::TermCriteria term_crit = cv::TermCriteria(cv::TermCriteria::COUNT + cv::TermCriteria::EPS, 15, 0.01);

cv::calcOpticalFlowPyrLK(img0pyr, img1pyr, pts0, pts1, mask_klt, error, win_size, pyr_levels, term_crit, cv::OPTFLOW_USE_INITIAL_FLOW);

Now KLT tracking is performed with cv::calcOpticalFlowPyrLK().

Its inputs can be single images or image pyramids built with cv::buildOpticalFlowPyramid().

Here image pyramids are passed.

pts0 is the input: the reference positions for the flow. pts1 is the output: the computed new feature positions. Because cv::OPTFLOW_USE_INITIAL_FLOW is set, the values already in pts1 (a copy of pts0) are used as the initial estimates.

mask_klt: the status output. An entry is set to 1 if the flow for that feature was found, and 0 otherwise.

error: one error value per feature. The kind of error measure is selected through the flags parameter; for example, cv::OPTFLOW_LK_GET_MIN_EIGENVALS uses the minimum eigenvalue instead of the default patch difference. OPTFLOW_USE_INITIAL_FLOW does not affect it. The value is undefined for features whose status is 0.

winSize (win_size): the size of the search window at each pyramid level.

maxLevel (pyr_levels): the 0-based maximal pyramid level. 0 means no pyramid (a single level), 1 means two levels, and so on.

criteria: when to terminate the iterative search. Here it stops after 15 iterations (COUNT) or when the search window moves by less than 0.01 (EPS), whichever comes first.
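To see what the COUNT + EPS criteria control, here is a deliberately simplified sketch: translation-only Lucas-Kanade on a hypothetical 1D signal, using a single level and analytic sub-pixel sampling. It is not OpenCV's implementation, and Signal1D and track_1d are made-up names. Each iteration takes a Gauss-Newton step on the shift d. The loop stops after max_count iterations or once the step is smaller than eps.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 1D "images": T(x) is the previous frame and I(x) = T(x - shift)
// is the new frame (pattern moved right by `shift` pixels). Both are analytic,
// so they can be sampled at sub-pixel positions.
struct Signal1D {
  double a, shift;
  double T(double x) const { return std::sin(a * x); }
  double I(double x) const { return std::sin(a * (x - shift)); }
  double dI(double x) const { return a * std::cos(a * (x - shift)); }  // dI/dx
};

struct LkResult { double d; int iterations; };

// Minimize sum_x (I(x + d) - T(x))^2 over the window [x_begin, x_end].
LkResult track_1d(const Signal1D& s, double d0, int x_begin, int x_end,
                  int max_count = 15, double eps = 0.01) {
  double d = d0;  // initial guess (cf. OPTFLOW_USE_INITIAL_FLOW)
  int it = 0;
  while (it < max_count) {              // COUNT criterion
    double num = 0.0, den = 0.0;
    for (int x = x_begin; x <= x_end; ++x) {
      double g = s.dI(x + d);           // gradient of the new frame
      double r = s.T(x) - s.I(x + d);   // residual against the template
      num += g * r;
      den += g * g;
    }
    double step = num / den;            // Gauss-Newton update
    d += step;
    ++it;
    if (std::fabs(step) < eps) break;   // EPS criterion
  }
  return {d, it};
}
```

Starting from d = 0 on a window of 11 samples, the estimate converges to the true 1.5-pixel shift within a few iterations, well before the 15-iteration cap.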

// Normalize these points, so we can then do ransac

// We don't want to do ransac on distorted image uvs since the mapping is nonlinear

std::vector<cv::Point2f> pts0_n, pts1_n;

for(size_t i=0; i<pts0.size(); i++) {

pts0_n.push_back(undistort_point(pts0.at(i),id0));

pts1_n.push_back(undistort_point(pts1.at(i),id1));

}

Before RANSAC, the detected and tracked keypoints are undistorted. undistort_point() returns normalized image coordinates rather than pixels, so the RANSAC below works in normalized coordinates.

For details of the undistortion, see the review of that function.

// Do RANSAC outlier rejection (note since we normalized the max pixel error is now in the normalized coords)

std::vector<uchar> mask_rsc;

double max_focallength_img0 = std::max(camera_k_OPENCV.at(id0)(0,0),camera_k_OPENCV.at(id0)(1,1));

double max_focallength_img1 = std::max(camera_k_OPENCV.at(id1)(0,0),camera_k_OPENCV.at(id1)(1,1));

double max_focallength = std::max(max_focallength_img0,max_focallength_img1);

cv::findFundamentalMat(pts0_n, pts1_n, cv::FM_RANSAC, 1/max_focallength, 0.999, mask_rsc);

camera_k_OPENCV has the following type:

std::map<size_t, cv::Matx33d> camera_k_OPENCV;

A std::map stores key/value pairs.

Here each camera id is paired with that camera's camera matrix.

The camera matrix has the form:

K = [ fx 0 cx ; 0 fy cy ; 0 0 1 ]

As you can see, entries (0,0) and (1,1) are the focal lengths fx and fy. The code takes the largest of them across both cameras.

Then, finally, the fundamental matrix is estimated.

For a detailed explanation of the fundamental matrix, see this blog post.

In short, given two images A and B, the fundamental matrix F maps a point x in image A to its epipolar line l' = F x in image B. Every correct correspondence x' in B satisfies x'^T F x = 0.

cv::findFundamentalMat() takes the corresponding points in the two images, estimates the fundamental matrix with RANSAC and returns it. Here the returned matrix is not used. The call only serves to reject outliers and keep the inliers.

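pts0_n: keypoints from the first image frame (normalized)

pts1_n: keypoints from the second image frame (normalized)

cv::FM_RANSAC: the method to use (RANSAC)

1/max_focallength: the RANSAC threshold, i.e. the maximum distance from a point to its epipolar line for it to count as an inlier. In pixel coordinates it is usually set between 1 and 3. So why is it 1 divided by max_focallength here? pts0_n and pts1_n are normalized coordinates (pixel offsets divided by the focal length). A distance of 1/f in normalized coordinates therefore corresponds to roughly 1 pixel in the image. This is what the code comment "the max pixel error is now in the normalized coords" refers to.

### GitHub issue on this question ###

0.999: the confidence, i.e. the desired probability that the estimated matrix is correct. It affects how many RANSAC iterations are run.

mask_rsc: an output vector of uchar. Keypoints judged to be outliers are set to 0, and inliers to 1.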
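To make the 1/max_focallength threshold concrete, here is a minimal sketch with hypothetical intrinsics. It uses a hand-built essential matrix for a pure sideways translation: the point X = (1, 2, 5) in camera 0 is seen at (2, 2, 5) in camera 1. With normalized points, the essential matrix plays the role of F in the epipolar constraint. The point-to-epipolar-line distance is one common error measure; OpenCV's internal RANSAC error may differ in detail. None of these names come from OpenVINS or OpenCV.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Hypothetical pinhole intrinsics; only fx = K(0,0) and fy = K(1,1) matter here.
struct Intrinsics { double fx, fy, cx, cy; };

// x_n = (u - cx) / fx, so 1 pixel in u is 1/fx in normalized units. The largest
// focal length gives the strictest threshold (at most ~1 pixel in any direction).
double ransac_threshold_normalized(const Intrinsics& k0, const Intrinsics& k1) {
  return 1.0 / std::max({k0.fx, k0.fy, k1.fx, k1.fy});
}

// Essential matrix for R = I and translation t: E = [t]_x (skew-symmetric).
Mat3 skew(const Vec3& t) {
  return {{{0.0, -t[2], t[1]}, {t[2], 0.0, -t[0]}, {-t[1], t[0], 0.0}}};
}

// Distance from x1 to the epipolar line l = F * x0 (homogeneous coordinates).
double epipolar_distance(const Mat3& F, const Vec3& x0, const Vec3& x1) {
  Vec3 l{};
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j) l[i] += F[i][j] * x0[j];
  double algebraic = x1[0] * l[0] + x1[1] * l[1] + x1[2] * l[2];  // x1^T F x0
  return std::fabs(algebraic) / std::sqrt(l[0] * l[0] + l[1] * l[1]);
}

bool is_inlier(const Mat3& F, const Vec3& x0, const Vec3& x1, double thresh) {
  return epipolar_distance(F, x0, x1) <= thresh;
}
```

With fx = 500, the threshold is 0.002. A match that is off by 0.001 in normalized coordinates (half a pixel) is kept, while one off by 0.1 (50 pixels) is rejected.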

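// Loop through and record only ones that are valid

for(size_t i=0; i<mask_klt.size(); i++) {

auto mask = (uchar)((i < mask_klt.size() && mask_klt[i] && i < mask_rsc.size() && mask_rsc[i])? 1 : 0);

mask_out.push_back(mask);

}

Now mask_out receives one entry per keypoint. The entry is 1 only if KLT tracked the keypoint (mask_klt) and RANSAC marked it as an inlier (mask_rsc), and 0 otherwise. Outliers are not removed here; they are only flagged with 0.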
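The merging rule can be sketched in isolation; merge_masks is a hypothetical name. Because of the bounds check, a shorter mask_rsc (for example, an empty one) makes the remaining entries 0 instead of reading out of bounds.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A point is kept (1) only if KLT tracked it AND RANSAC marked it an inlier.
// Entries beyond the end of mask_rsc count as 0.
std::vector<unsigned char> merge_masks(const std::vector<unsigned char>& mask_klt,
                                       const std::vector<unsigned char>& mask_rsc) {
  std::vector<unsigned char> mask_out;
  mask_out.reserve(mask_klt.size());
  for (std::size_t i = 0; i < mask_klt.size(); ++i) {
    bool ok = mask_klt[i] && i < mask_rsc.size() && mask_rsc[i];
    mask_out.push_back(ok ? 1 : 0);
  }
  return mask_out;
}
```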

| // Copy back the updated positions |

| for(size_t i=0; i<pts0.size(); i++) { |

| kpts0.at(i).pt = pts0.at(i); |

| kpts1.at(i).pt = pts1.at(i); |

| } |

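Finally, the updated (tracked) point positions are copied back into the keypoint vectors kpts0 and kpts1 that were passed in as parameters.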

undistort_point()

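This function loads the camera's cameraMatrix (camK) and distCoeffs (camD), then calls the matching undistortion function.

Which function is called depends on the camera model, not on whether the setup is mono or stereo. If camera_fisheye is set for this camera, undistort_point_fisheye() is used; otherwise undistort_point_brown() is used. In our setup undistort_point_brown() is the one that runs, meaning the camera is configured as non-fisheye (pinhole-radtan).

That is all we need to know here.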

/**

* @brief Main function that will undistort/normalize a point.

* @param pt_in uv 2x1 point that we will undistort

* @param cam_id id of which camera this point is in

* @return undistorted 2x1 point

*

* Given a uv point, this will undistort it based on the camera matrices.

* This will call on the model needed, depending on what type of camera it is!

* So if we have fisheye for camera_1 is true, we will undistort with the fisheye model.

* In Kalibr's terms, the non-fisheye is `pinhole-radtan` while the fisheye is the `pinhole-equi` model.

*/

cv::Point2f undistort_point(cv::Point2f pt_in, size_t cam_id) {

// Determine what camera parameters we should use

cv::Matx33d camK = this->camera_k_OPENCV.at(cam_id);

cv::Vec4d camD = this->camera_d_OPENCV.at(cam_id);

// Call on the fisheye if we should!

if (this->camera_fisheye.at(cam_id)) {

return undistort_point_fisheye(pt_in, camK, camD);

}

return undistort_point_brown(pt_in, camK, camD);

}

undistort_point_brown()

This function removes lens distortion from an image feature point using the radial-tangential (Brown) model, i.e. Kalibr's pinhole-radtan.

/**

* @brief Undistort function RADTAN/BROWN.

*

* Given a uv point, this will undistort it based on the camera matrices.

* To equate this to Kalibr's models, this is what you would use for `pinhole-radtan`.

*/

cv::Point2f undistort_point_brown(cv::Point2f pt_in, cv::Matx33d &camK, cv::Vec4d &camD) {

// Convert to opencv format

cv::Mat mat(1, 2, CV_32F);

mat.at<float>(0, 0) = pt_in.x;

mat.at<float>(0, 1) = pt_in.y;

mat = mat.reshape(2); // Nx1, 2-channel

// Undistort it!

cv::undistortPoints(mat, mat, camK, camD);

// Construct our return vector

cv::Point2f pt_out;

mat = mat.reshape(1); // Nx2, 1-channel

pt_out.x = mat.at<float>(0, 0);

pt_out.y = mat.at<float>(0, 1);

return pt_out;

}

| // Convert to opencv format |

| cv::Mat mat(1, 2, CV_32F); |

| mat.at<float>(0, 0) = pt_in.x; |

| mat.at<float>(0, 1) = pt_in.y; |

| mat = mat.reshape(2); // Nx1, 2-channel |

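First, the point is stored in a matrix with 1 row and 2 columns.

Then reshape is called.

cv::Mat::reshape changes the shape of a matrix (its number of channels and rows) without copying the data.

Its signature is Mat cv::Mat::reshape( int cn, int rows=0 ) const,

where cn is the new number of channels and rows is the new number of rows.

A value of 0 for either argument keeps the current number of channels or rows.

So here the 1x2 matrix keeps its single row (rows defaults to 0) and is changed to 2 channels. The result is a 1x1 matrix with 2 channels.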

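Channels can be understood as follows.

A grayscale image has 1 channel and a color image has 3 channels.

In other words, the channel count is the number of values stored per pixel (1 or 3).

For example, a 3x3 grayscale image and a 3x3 color image look like this:

Grayscale image (1 channel, 1 value per pixel)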

| 255 | 255 | 255 |

| 255 | 255 | 255 |

| 255 | 255 | 255 |

Color image (3 channels, 3 values per pixel)

| 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 |

| 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 |

| 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 | 255 |

So the 1x2 matrix looks like this:

| value_1 (row 1, col 1) | value_2 (row 1, col 2) |

and after mat.reshape(2) it looks like this:

| value_1 (row 1, col 1, channel 1) | value_2 (row 1, col 1, channel 2) |
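Because reshape does not copy data, both views share one buffer. The hypothetical flat_offset() below is a sketch that assumes a continuous matrix with interleaved channels. It shows that value_2 lives in the same memory slot before and after reshape(2).

```cpp
#include <cassert>
#include <cstddef>

// Offset of element (row, col, ch) in a continuous, row-major buffer whose
// channels are interleaved per element (the layout of a continuous cv::Mat).
std::size_t flat_offset(std::size_t row, std::size_t col, std::size_t ch,
                        std::size_t cols, std::size_t channels) {
  return (row * cols + col) * channels + ch;
}
```

For the 1x2 single-channel view, value_2 is element (0, 1, 0). For the 1x1 two-channel view it is element (0, 0, 1). Both map to offset 1.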

| // Undistort it! |

| cv::undistortPoints(mat, mat, camK, camD); |

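Next, cv::undistortPoints is used to remove the distortion.

Here mat is both the input matrix holding the point and the output matrix that receives the undistorted point.

camK and camD are passed as parameters:

camK is the cameraMatrix and camD is the distCoeffs.

No rectification (R) or new projection matrix (P) is passed, so the output is in normalized image coordinates rather than pixels. This is why the result is called the undistorted/normalized point.

The camera matrix consists of the focal lengths and the principal point, in this form:

$$K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

distCoeffs holds the distortion parameters. For the radtan model stored in a cv::Vec4d, it is the vector

$$D = \begin{bmatrix} k_1 & k_2 & p_1 & p_2 \end{bmatrix}$$

where k_1 and k_2 are the radial coefficients and p_1 and p_2 are the tangential coefficients.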
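To show what undistortion has to do, here is a standard-library-only sketch of the radtan model and one common way to invert it. It first normalizes the pixel with K, then solves for the undistorted point by fixed-point iteration. OpenCV's undistortPoints is also iterative, but this is not its code. The struct names, the iteration count, and the made-up but realistic calibration numbers in the check are assumptions.

```cpp
#include <cassert>
#include <cmath>

struct Pt { double x, y; };
struct RadTan { double k1, k2, p1, p2; };  // same order as a 4-element distCoeffs

// Forward radtan model on normalized coordinates.
Pt distort(const Pt& p, const RadTan& d) {
  double r2 = p.x * p.x + p.y * p.y;
  double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2;
  double tx = 2.0 * d.p1 * p.x * p.y + d.p2 * (r2 + 2.0 * p.x * p.x);
  double ty = d.p1 * (r2 + 2.0 * p.y * p.y) + 2.0 * d.p2 * p.x * p.y;
  return {p.x * radial + tx, p.y * radial + ty};
}

// Undistort a pixel: normalize with K, then iterate
// p <- (x_d - tangential(p)) / radial(p). The result is in normalized
// coordinates, like cv::undistortPoints called without P.
Pt undistort(const Pt& uv, double fx, double fy, double cx, double cy,
             const RadTan& d, int iters = 20) {
  const Pt xd = {(uv.x - cx) / fx, (uv.y - cy) / fy};
  Pt p = xd;  // initial guess: the distorted point itself
  for (int i = 0; i < iters; i++) {
    double r2 = p.x * p.x + p.y * p.y;
    double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2;
    double tx = 2.0 * d.p1 * p.x * p.y + d.p2 * (r2 + 2.0 * p.x * p.x);
    double ty = d.p1 * (r2 + 2.0 * p.y * p.y) + 2.0 * d.p2 * p.x * p.y;
    p = {(xd.x - tx) / radial, (xd.y - ty) / radial};
  }
  return p;
}
```

The check distorts a known normalized point, projects it to pixels, and verifies that undistort() recovers it.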

| // Construct our return vector |

| cv::Point2f pt_out; |

| mat = mat.reshape(1); // Nx2, 1-channel |

| pt_out.x = mat.at<float>(0, 0); |

| pt_out.y = mat.at<float>(0, 1); |

| return pt_out; |

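In other words, mat was reshaped only so that it could be passed to cv::undistortPoints, which expects 2-channel point data.

After undistortion, it is reshaped back into a 1x2 single-channel matrix, and its two values are copied into a cv::Point2f.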

database->update_feature()

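This function adds a new measurement to features_idlookup. It appends to an existing feature, or creates the feature if the ID has not been seen before.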

/**

* @brief Update a feature object

* @param id ID of the feature we will update

* @param timestamp time that this measurement occured at

* @param cam_id which camera this measurement was from

* @param u raw u coordinate

* @param v raw v coordinate

* @param u_n undistorted/normalized u coordinate

* @param v_n undistorted/normalized v coordinate

*

* This will update a given feature based on the passed ID it has.

* It will create a new feature, if it is an ID that we have not seen before.

*/

void update_feature(size_t id, double timestamp, size_t cam_id,

float u, float v, float u_n, float v_n) {

// Find this feature using the ID lookup

std::unique_lock<std::mutex> lck(mtx);

if (features_idlookup.find(id) != features_idlookup.end()) {

// Get our feature

Feature *feat = features_idlookup[id];

// Append this new information to it!

feat->uvs[cam_id].emplace_back(Eigen::Vector2f(u, v));

feat->uvs_norm[cam_id].emplace_back(Eigen::Vector2f(u_n, v_n));

feat->timestamps[cam_id].emplace_back(timestamp);

return;

}

// Debug info

//ROS_INFO("featdb - adding new feature %d",(int)id);

// Else we have not found the feature, so lets make it be a new one!

Feature *feat = new Feature();

feat->featid = id;

feat->uvs[cam_id].emplace_back(Eigen::Vector2f(u, v));

feat->uvs_norm[cam_id].emplace_back(Eigen::Vector2f(u_n, v_n));

feat->timestamps[cam_id].emplace_back(timestamp);

// Append this new feature into our database

features_idlookup.insert({id, feat});

}

| // Find this feature using the ID lookup |

| std::unique_lock<std::mutex> lck(mtx); |

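First, the mutex is locked with std::unique_lock. The lock is released automatically when lck goes out of scope, i.e. when the function returns.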

| if (features_idlookup.find(id) != features_idlookup.end()) { |

| // Get our feature |

| Feature *feat = features_idlookup[id]; |

| // Append this new information to it! |

| feat->uvs[cam_id].emplace_back(Eigen::Vector2f(u, v)); |

| feat->uvs_norm[cam_id].emplace_back(Eigen::Vector2f(u_n, v_n)); |

| feat->timestamps[cam_id].emplace_back(timestamp); |

| return; |

| } |

features_idlookup is declared as follows:

std::unordered_map<size_t, Feature *> features_idlookup;

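unordered_map is a hash-map data type.

It stores key-value pairs and supports fast lookup by key (constant time on average).

std::unordered_map::find looks up the key passed as its argument and returns an iterator.

If the key is found, the iterator points to the matching element (a key-value pair). If it is not found,

find returns std::unordered_map::end().

So if (features_idlookup.find(id) != features_idlookup.end())

is a condition that is true when the key already exists.

Inside that branch,

a pointer Feature *feat is set to features_idlookup[id]. The value stored in the map is already a Feature* pointer, so this only copies the pointer; no new object is created.

Then the raw uv, the normalized uv, and the timestamp are appended to that feature's per-camera vectors (uvs[cam_id], uvs_norm[cam_id], timestamps[cam_id]).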

| // Else we have not found the feature, so lets make it be a new one! |

| Feature *feat = new Feature(); |

| feat->featid = id; |

| feat->uvs[cam_id].emplace_back(Eigen::Vector2f(u, v)); |

| feat->uvs_norm[cam_id].emplace_back(Eigen::Vector2f(u_n, v_n)); |

| feat->timestamps[cam_id].emplace_back(timestamp); |

| // Append this new feature into our database |

| features_idlookup.insert({id, feat}); |

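If no feature with this id exists yet, a new Feature object is created with new, filled with this first measurement, and inserted into the existing features_idlookup map.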
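The find-or-create pattern can be sketched with standard containers only. MiniFeature and MiniDatabase are hypothetical stand-ins, not the OpenVINS classes. Unlike the original, the sketch reuses the iterator returned by find() instead of looking the key up a second time with operator[]. It also uses std::unique_ptr, so no manual delete is needed.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical, stripped-down stand-in for a Feature.
struct MiniFeature {
  std::size_t featid = 0;
  std::unordered_map<std::size_t, std::vector<std::pair<float, float>>> uvs;
  std::unordered_map<std::size_t, std::vector<double>> timestamps;
};

class MiniDatabase {
 public:
  // Append a measurement to an existing feature, or create the feature first.
  void update_feature(std::size_t id, double timestamp, std::size_t cam_id,
                      float u, float v) {
    std::lock_guard<std::mutex> lck(mtx_);  // released at end of scope
    auto it = features_idlookup_.find(id);  // iterator to {key, value} or end()
    if (it == features_idlookup_.end()) {
      auto feat = std::make_unique<MiniFeature>();
      feat->featid = id;
      it = features_idlookup_.emplace(id, std::move(feat)).first;
    }
    it->second->uvs[cam_id].emplace_back(u, v);
    it->second->timestamps[cam_id].push_back(timestamp);
  }

  const MiniFeature* get(std::size_t id) const {
    std::lock_guard<std::mutex> lck(mtx_);
    auto it = features_idlookup_.find(id);
    return it == features_idlookup_.end() ? nullptr : it->second.get();
  }

  std::size_t size() const {
    std::lock_guard<std::mutex> lck(mtx_);
    return features_idlookup_.size();
  }

 private:
  mutable std::mutex mtx_;
  std::unordered_map<std::size_t, std::unique_ptr<MiniFeature>> features_idlookup_;
};
```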

try_to_initialize()

bool VioManager::try_to_initialize() {

// Returns from our initializer

double time0;

Eigen::Matrix<double, 4, 1> q_GtoI0;

Eigen::Matrix<double, 3, 1> b_w0, v_I0inG, b_a0, p_I0inG;

// Try to initialize the system

bool success = initializer->initialize_with_imu(time0, q_GtoI0, b_w0, v_I0inG, b_a0, p_I0inG);

// Return if it failed

if (!success) {

return false;

}

// Make big vector (q,p,v,bg,ba), and update our state

// Note: start from zero position, as this is what our covariance is based off of

Eigen::Matrix<double,16,1> imu_val;

imu_val.block(0,0,4,1) = q_GtoI0;

imu_val.block(4,0,3,1) << 0,0,0;

imu_val.block(7,0,3,1) = v_I0inG;

imu_val.block(10,0,3,1) = b_w0;

imu_val.block(13,0,3,1) = b_a0;

//imu_val.block(10,0,3,1) << 0,0,0;

//imu_val.block(13,0,3,1) << 0,0,0;

state->_imu->set_value(imu_val);

state->_imu->set_fej(imu_val);

state->_timestamp = time0;

startup_time = time0;

// Cleanup any features older then the initialization time

trackFEATS->get_feature_database()->cleanup_measurements(state->_timestamp);

if(trackARUCO != nullptr) {

trackARUCO->get_feature_database()->cleanup_measurements(state->_timestamp);

}

// Else we are good to go, print out our stats

printf(GREEN "[INIT]: orientation = %.4f, %.4f, %.4f, %.4f\n" RESET,state->_imu->quat()(0),state->_imu->quat()(1),state->_imu->quat()(2),state->_imu->quat()(3));

printf(GREEN "[INIT]: bias gyro = %.4f, %.4f, %.4f\n" RESET,state->_imu->bias_g()(0),state->_imu->bias_g()(1),state->_imu->bias_g()(2));

printf(GREEN "[INIT]: velocity = %.4f, %.4f, %.4f\n" RESET,state->_imu->vel()(0),state->_imu->vel()(1),state->_imu->vel()(2));

printf(GREEN "[INIT]: bias accel = %.4f, %.4f, %.4f\n" RESET,state->_imu->bias_a()(0),state->_imu->bias_a()(1),state->_imu->bias_a()(2));

printf(GREEN "[INIT]: position = %.4f, %.4f, %.4f\n" RESET,state->_imu->pos()(0),state->_imu->pos()(1),state->_imu->pos()(2));

return true;

}

| // Returns from our initializer |

| double time0; |

| Eigen::Matrix<double, 4, 1> q_GtoI0; |

| Eigen::Matrix<double, 3, 1> b_w0, v_I0inG, b_a0, p_I0inG; |

Declare the variables that the initializer will fill in.

| // Try to initialize the system |

| bool success = initializer->initialize_with_imu(time0, q_GtoI0, b_w0, v_I0inG, b_a0, p_I0inG); |

initialize_with_imu() writes its results into these parameters, which are passed by reference.

| // Return if it failed |

| if (!success) { |

| return false; |

| } |

If initialization fails, the function returns false.

| // Make big vector (q,p,v,bg,ba), and update our state |

| // Note: start from zero position, as this is what our covariance is based off of |

| Eigen::Matrix<double,16,1> imu_val; |

| imu_val.block(0,0,4,1) = q_GtoI0; |

| imu_val.block(4,0,3,1) << 0,0,0; |

| imu_val.block(7,0,3,1) = v_I0inG; |

| imu_val.block(10,0,3,1) = b_w0; |

| imu_val.block(13,0,3,1) = b_a0; |

| //imu_val.block(10,0,3,1) << 0,0,0; |

| //imu_val.block(13,0,3,1) << 0,0,0; |

| state->_imu->set_value(imu_val); |

| state->_imu->set_fej(imu_val); |

| state->_timestamp = time0; |

| startup_time = time0; |

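A 16x1 vector imu_val is built and the values are assigned into it in the order q (4), p (3), v (3), bg (3), ba (3).

Eigen's block is used like this:

matrix.block(i,j,p,q);

It refers to the p x q sub-block that starts at row i, column j. Assigning to it overwrites those entries.

Note that the position block is set to zero rather than to p_I0inG, as the code comment says: the covariance is defined around a zero starting position.

This imu_val is then set into state->_imu both as the current value (set_value) and as the first estimate (set_fej).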
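The packing order can be checked with a tiny sketch that uses std::array instead of Eigen. pack_imu_state() is a hypothetical helper. Each loop plays the role of one block(start,0,n,1) assignment, and the quaternion is stored JPL-style with the scalar last.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using Vec3 = std::array<double, 3>;
using Quat = std::array<double, 4>;  // JPL order: x, y, z, w (scalar last)

// Layout: [ q (0..3) | p (4..6) | v (7..9) | bg (10..12) | ba (13..15) ]
std::array<double, 16> pack_imu_state(const Quat& q, const Vec3& p,
                                      const Vec3& v, const Vec3& bg,
                                      const Vec3& ba) {
  std::array<double, 16> x{};
  for (std::size_t i = 0; i < 4; i++) x[0 + i] = q[i];  // block(0,0,4,1)
  for (std::size_t i = 0; i < 3; i++) {
    x[4 + i] = p[i];    // block(4,0,3,1)
    x[7 + i] = v[i];    // block(7,0,3,1)
    x[10 + i] = bg[i];  // block(10,0,3,1)
    x[13 + i] = ba[i];  // block(13,0,3,1)
  }
  return x;
}
```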

| // Else we are good to go, print out our stats |

| printf(GREEN "[INIT]: orientation = %.4f, %.4f, %.4f, %.4f\n" RESET,state->_imu->quat()(0),state->_imu->quat()(1),state->_imu->quat()(2),state->_imu->quat()(3)); |

| printf(GREEN "[INIT]: bias gyro = %.4f, %.4f, %.4f\n" RESET,state->_imu->bias_g()(0),state->_imu->bias_g()(1),state->_imu->bias_g()(2)); |

| printf(GREEN "[INIT]: velocity = %.4f, %.4f, %.4f\n" RESET,state->_imu->vel()(0),state->_imu->vel()(1),state->_imu->vel()(2)); |

| printf(GREEN "[INIT]: bias accel = %.4f, %.4f, %.4f\n" RESET,state->_imu->bias_a()(0),state->_imu->bias_a()(1),state->_imu->bias_a()(2)); |

| printf(GREEN "[INIT]: position = %.4f, %.4f, %.4f\n" RESET,state->_imu->pos()(0),state->_imu->pos()(1),state->_imu->pos()(2)); |

| return true; |

The initialized state is printed for debugging.

initialize_with_imu()

bool InertialInitializer::initialize_with_imu(double &time0, Eigen::Matrix<double,4,1> &q_GtoI0, Eigen::Matrix<double,3,1> &b_w0,

Eigen::Matrix<double,3,1> &v_I0inG, Eigen::Matrix<double,3,1> &b_a0, Eigen::Matrix<double,3,1> &p_I0inG) {

// Return if we don't have any measurements

if(imu_data.empty()) {

return false;

}

// Newest imu timestamp

double newesttime = imu_data.at(imu_data.size()-1).timestamp;

// First lets collect a window of IMU readings from the newest measurement to the oldest

std::vector<IMUDATA> window_newest, window_secondnew;

for(IMUDATA data : imu_data) {

if(data.timestamp > newesttime-1*_window_length && data.timestamp <= newesttime-0*_window_length) {

window_newest.push_back(data);

}

if(data.timestamp > newesttime-2*_window_length && data.timestamp <= newesttime-1*_window_length) {

window_secondnew.push_back(data);

}

}

// Return if both of these failed

if(window_newest.empty() || window_secondnew.empty()) {

//printf(YELLOW "InertialInitializer::initialize_with_imu(): unable to select window of IMU readings, not enough readings\n" RESET);

return false;

}

// Calculate the sample variance for the newest one

Eigen::Matrix<double,3,1> a_avg = Eigen::Matrix<double,3,1>::Zero();

for(IMUDATA data : window_newest) {

a_avg += data.am;

}

a_avg /= (int)window_newest.size();

double a_var = 0;

for(IMUDATA data : window_newest) {

a_var += (data.am-a_avg).dot(data.am-a_avg);

}

a_var = std::sqrt(a_var/((int)window_newest.size()-1));

// If it is below the threshold just return

if(a_var < _imu_excite_threshold) {

printf(YELLOW "InertialInitializer::initialize_with_imu(): no IMU excitation, below threshold %.4f < %.4f\n" RESET,a_var,_imu_excite_threshold);

return false;

}

// Return if we don't have any measurements

//if(imu_data.size() < 200) {

// return false;

//}

// Sum up our current accelerations and velocities

Eigen::Vector3d linsum = Eigen::Vector3d::Zero();

Eigen::Vector3d angsum = Eigen::Vector3d::Zero();

for(size_t i=0; i<window_secondnew.size(); i++) {

linsum += window_secondnew.at(i).am;

angsum += window_secondnew.at(i).wm;

}

// Calculate the mean of the linear acceleration and angular velocity

Eigen::Vector3d linavg = Eigen::Vector3d::Zero();

Eigen::Vector3d angavg = Eigen::Vector3d::Zero();

linavg = linsum/window_secondnew.size();

angavg = angsum/window_secondnew.size();

// Get z axis, which alines with -g (z_in_G=0,0,1)

Eigen::Vector3d z_axis = linavg/linavg.norm();

// Create an x_axis

Eigen::Vector3d e_1(1,0,0);

// Make x_axis perpendicular to z

Eigen::Vector3d x_axis = e_1-z_axis*z_axis.transpose()*e_1;

x_axis= x_axis/x_axis.norm();

// Get z from the cross product of these two

Eigen::Matrix<double,3,1> y_axis = skew_x(z_axis)*x_axis;

// From these axes get rotation

Eigen::Matrix<double,3,3> Ro;

Ro.block(0,0,3,1) = x_axis;

Ro.block(0,1,3,1) = y_axis;

Ro.block(0,2,3,1) = z_axis;

// Create our state variables

Eigen::Matrix<double,4,1> q_GtoI = rot_2_quat(Ro);

// Set our biases equal to our noise (subtract our gravity from accelerometer bias)

Eigen::Matrix<double,3,1> bg = angavg;

Eigen::Matrix<double,3,1> ba = linavg - quat_2_Rot(q_GtoI)*_gravity;

// Set our state variables

time0 = window_secondnew.at(window_secondnew.size()-1).timestamp;

q_GtoI0 = q_GtoI;

b_w0 = bg;

v_I0inG = Eigen::Matrix<double,3,1>::Zero();

b_a0 = ba;

p_I0inG = Eigen::Matrix<double,3,1>::Zero();

// Done!!!

return true;

}

| // Return if we don't have any measurements |

| if(imu_data.empty()) { |

| return false; |

| } |

Check that there is any IMU data at all.

| // Newest imu timestamp |

| double newesttime = imu_data.at(imu_data.size()-1).timestamp; |

Get the timestamp of the newest IMU measurement.

| // First lets collect a window of IMU readings from the newest measurement to the oldest |

| std::vector<IMUDATA> window_newest, window_secondnew; |

| for(IMUDATA data : imu_data) { |

| if(data.timestamp > newesttime-1*_window_length && data.timestamp <= newesttime-0*_window_length) { |

| window_newest.push_back(data); |

| } |

| if(data.timestamp > newesttime-2*_window_length && data.timestamp <= newesttime-1*_window_length) { |

| window_secondnew.push_back(data); |

| } |

| } |

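This part fills window_newest and window_secondnew with IMU data.

_window_length, which is set at startup, is the window size. Samples in (newesttime - _window_length, newesttime] go into window_newest,

and samples in (newesttime - 2*_window_length, newesttime - _window_length], i.e. the window just before it, go into window_secondnew.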

| // Return if both of these failed |

| if(window_newest.empty() || window_secondnew.empty()) { |

| //printf(YELLOW "InertialInitializer::initialize_with_imu(): unable to select window of IMU readings, not enough readings\n" RESET); |

| return false; |

| } |

If either window_newest or window_secondnew is empty, the function returns false.

| // Calculate the sample variance for the newest one |

| Eigen::Matrix<double,3,1> a_avg = Eigen::Matrix<double,3,1>::Zero(); |

| for(IMUDATA data : window_newest) { |

| a_avg += data.am; |

| } |

| a_avg /= (int)window_newest.size(); |

| double a_var = 0; |

| for(IMUDATA data : window_newest) { |

| a_var += (data.am-a_avg).dot(data.am-a_avg); |

| } |

| a_var = std::sqrt(a_var/((int)window_newest.size()-1)); |

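The accelerometer measurements in the newest window are averaged into a_avg.

.dot is the inner (dot) product, so (data.am-a_avg).dot(data.am-a_avg) is the squared norm of the deviation, ||data.am - a_avg||^2.

These squared deviations are summed into a_var.

Finally, a_var is divided by (N - 1), where N is the number of samples in window_newest, and the square root (sqrt) is taken. Despite its name, a_var therefore holds the sample standard deviation of the acceleration, not the variance.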

| // If it is below the threshold just return |

| if(a_var < _imu_excite_threshold) { |

| printf(YELLOW "InertialInitializer::initialize_with_imu(): no IMU excitation, below threshold %.4f < %.4f\n" RESET,a_var,_imu_excite_threshold); |

| return false; |

| } |

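If the computed a_var (the acceleration standard deviation) is below the excitation threshold _imu_excite_threshold set at startup, the IMU has not been moved enough, so the function returns false.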
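The window split and the excitation test can be sketched without Eigen. ImuSample, split_windows() and accel_std() are hypothetical names. The bounds mirror the original half-open intervals, and accel_std() computes sqrt(sum ||a_i - a_avg||^2 / (N - 1)), i.e. a standard deviation.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical, simplified IMU sample: timestamp and accelerometer vector.
struct ImuSample { double t; double ax, ay, az; };

// newest: (t_new - L, t_new], second: (t_new - 2L, t_new - L]
void split_windows(const std::vector<ImuSample>& data, double L,
                   std::vector<ImuSample>& newest,
                   std::vector<ImuSample>& second) {
  if (data.empty()) return;
  double t_new = data.back().t;
  for (const auto& s : data) {
    if (s.t > t_new - L && s.t <= t_new) newest.push_back(s);
    if (s.t > t_new - 2 * L && s.t <= t_new - L) second.push_back(s);
  }
}

// Sample standard deviation of the accelerometer vectors. Requires N >= 2.
double accel_std(const std::vector<ImuSample>& w) {
  double mx = 0, my = 0, mz = 0;
  for (const auto& s : w) { mx += s.ax; my += s.ay; mz += s.az; }
  double n = static_cast<double>(w.size());
  mx /= n; my /= n; mz /= n;
  double sum = 0;
  for (const auto& s : w) {
    double dx = s.ax - mx, dy = s.ay - my, dz = s.az - mz;
    sum += dx * dx + dy * dy + dz * dz;  // same as (a - a_avg).dot(a - a_avg)
  }
  return std::sqrt(sum / (n - 1));
}
```

In the check, the older window is perfectly still (standard deviation 0). The newest window contains a ±1 m/s^2 jolt along x, giving sqrt(2). In the original code, a window_newest with a single sample makes the division 0/0, which is NaN. Since NaN < threshold is false, the check would not return in that case. The sketch simply requires at least two samples.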

| // Sum up our current accelerations and velocities |

| Eigen::Vector3d linsum = Eigen::Vector3d::Zero(); |

| Eigen::Vector3d angsum = Eigen::Vector3d::Zero(); |

| for(size_t i=0; i<window_secondnew.size(); i++) { |

| linsum += window_secondnew.at(i).am; |

| angsum += window_secondnew.at(i).wm; |

| } |

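Next, window_secondnew is used. Note that this is the older of the two windows, not the newest one.

From it, the sum of the linear accelerations (linsum) and the sum of the angular velocities (angsum) are computed.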

| // Calculate the mean of the linear acceleration and angular velocity |

| Eigen::Vector3d linavg = Eigen::Vector3d::Zero(); |

| Eigen::Vector3d angavg = Eigen::Vector3d::Zero(); |

| linavg = linsum/window_secondnew.size(); |

| angavg = angsum/window_secondnew.size(); |

Then the mean linear acceleration linavg and the mean angular velocity angavg are computed.

| // Get z axis, which alines with -g (z_in_G=0,0,1) |

| Eigen::Vector3d z_axis = linavg/linavg.norm(); |

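linavg is a single 3D vector.

Eigen's norm() returns the length of a vector, i.e. the square root of the vector's dot product with itself.

Dividing the vector by its length normalizes it to unit length.

While the IMU is stationary, the accelerometer measures only the reaction to gravity, so linavg points "up" (opposite to g) in IMU coordinates. The normalized z_axis is therefore the global z axis as seen from the IMU, not the IMU's own (0,0,1) axis. It serves as the reference direction for the other two axes.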

| // Create an x_axis |

| Eigen::Vector3d e_1(1,0,0); |

Create the basis vector e_1 = (1,0,0) as a starting point for the x axis.

| // Make x_axis perpendicular to z |

| Eigen::Vector3d x_axis = e_1-z_axis*z_axis.transpose()*e_1; |

| x_axis= x_axis/x_axis.norm(); |

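transpose() returns the transpose of a matrix. Here it turns the column vector z_axis into a row vector.

This step makes x_axis perpendicular to z_axis; it is one Gram-Schmidt step. Because z_axis has unit length, z_axis*z_axis.transpose()*e_1 is the projection of e_1 onto z_axis. Subtracting that projection leaves only the part of e_1 that is perpendicular to z_axis, and dividing by the norm makes it a unit vector:

$$\mathbf{x} = \frac{\mathbf{e}_1 - \mathbf{z}\mathbf{z}^\top\mathbf{e}_1}{\lVert \mathbf{e}_1 - \mathbf{z}\mathbf{z}^\top\mathbf{e}_1 \rVert}, \qquad \mathbf{z}^\top(\mathbf{e}_1 - \mathbf{z}\mathbf{z}^\top\mathbf{e}_1) = \mathbf{z}^\top\mathbf{e}_1 - (\mathbf{z}^\top\mathbf{z})\,\mathbf{z}^\top\mathbf{e}_1 = 0$$

This breaks down if z_axis is parallel to e_1 (the IMU's x axis pointing straight up or down), because the numerator becomes zero.

The related issue is:

mathematical derivation of "initialize_with_imu()".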

| // Get z from the cross product of these two |

| Eigen::Matrix<double,3,1> y_axis = skew_x(z_axis)*x_axis; |

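ov_core::skew_x builds the skew-symmetric matrix of a given vector.

For v = (v_1, v_2, v_3) it gives

$$\lfloor \mathbf{v}\times\rfloor = \begin{bmatrix} 0 & -v_3 & v_2 \\ v_3 & 0 & -v_1 \\ -v_2 & v_1 & 0 \end{bmatrix}$$

and multiplying this matrix by x_axis computes the cross product z_axis × x_axis.

The cross product of two vectors is perpendicular to both of them.

So the vector perpendicular to both the z axis and the x axis is the y axis. The code comment says "Get z", but the line actually computes y.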

| // From these axes get rotation |

| Eigen::Matrix<double,3,3> Ro; |

| Ro.block(0,0,3,1) = x_axis; |

| Ro.block(0,1,3,1) = y_axis; |

| Ro.block(0,2,3,1) = z_axis; |

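These three axes become the columns of the rotation matrix Ro.

To answer my earlier question: yes, the rotation is obtained from am. z_axis comes from the averaged accelerometer reading linavg, and the other two axes are built around it. Each column is a global axis expressed in IMU coordinates, so Ro rotates global-frame vectors into the IMU frame, which matches the name q_GtoI.

Yaw (rotation about gravity) cannot be observed from the accelerometer. The choice of e_1 simply fixes it arbitrarily.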
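The whole frame construction can be sketched with std::array instead of Eigen. The helper names are hypothetical. The steps follow the code above: normalize linavg, remove the z component from e_1, and take y = z × x.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // Mat3[row][col]

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
Vec3 scale(const Vec3& a, double s) { return {a[0] * s, a[1] * s, a[2] * s}; }
Vec3 sub(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
Vec3 normalized(const Vec3& a) { return scale(a, 1.0 / std::sqrt(dot(a, a))); }
// Same result as skew_x(a) * b.
Vec3 cross(const Vec3& a, const Vec3& b) {
  return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

// Ro = [x_axis | y_axis | z_axis] built from the mean accelerometer reading.
// Degenerate (division by zero) if linavg is parallel to (1, 0, 0).
Mat3 gravity_aligned_rotation(const Vec3& linavg) {
  Vec3 z = normalized(linavg);
  Vec3 e1 = {1.0, 0.0, 0.0};
  Vec3 x = normalized(sub(e1, scale(z, dot(z, e1))));  // e1 - z (z^T e1)
  Vec3 y = cross(z, x);
  Mat3 R{};
  for (int r = 0; r < 3; r++) { R[r][0] = x[r]; R[r][1] = y[r]; R[r][2] = z[r]; }
  return R;
}
```

The check confirms that the columns are orthonormal and right-handed (x × y = z).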

| // Create our state variables |

| Eigen::Matrix<double,4,1> q_GtoI = rot_2_quat(Ro); |

Convert it to a (JPL) quaternion.

| // Set our biases equal to our noise (subtract our gravity from accelerometer bias) |

| Eigen::Matrix<double,3,1> bg = angavg; |

| Eigen::Matrix<double,3,1> ba = linavg - quat_2_Rot(q_GtoI)*_gravity; |

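The biases are then set from the averages computed above.

The gyro bias bg is simply angavg, since the true angular velocity is assumed to be zero while the IMU is stationary.

The accelerometer bias ba is linavg minus the gravity vector rotated into the IMU frame (quat_2_Rot(q_GtoI)*_gravity), i.e. whatever remains of the mean accelerometer reading after gravity is removed.

Both estimates rely on the IMU being stationary during window_secondnew. The excitation check on window_newest is what detects that motion has just started.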
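Here is a short sketch of what the accelerometer-bias line reduces to. It assumes _gravity is (0, 0, g) in the global frame, as the comment z_in_G=0,0,1 suggests. The third column of Ro is z_axis = linavg/||linavg||, so Ro*(0,0,g) = g*z_axis, and the estimate becomes linavg - g*linavg/||linavg||. The helper name is hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

// ba = linavg - g * linavg / ||linavg||  (assumes gravity = (0, 0, g) globally)
Vec3 accel_bias_from_mean(const Vec3& linavg, double g) {
  double n = std::sqrt(linavg[0] * linavg[0] + linavg[1] * linavg[1] + linavg[2] * linavg[2]);
  Vec3 ba{};
  for (int i = 0; i < 3; i++) ba[i] = linavg[i] - g * linavg[i] / n;
  return ba;
}
```

The result always points along linavg. Only the difference between the measured magnitude and g is attributed to the bias; any sideways bias component is absorbed into the estimated tilt instead.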

| // Set our state variables |

| time0 = window_secondnew.at(window_secondnew.size()-1).timestamp; |

| q_GtoI0 = q_GtoI; |

| b_w0 = bg; |

| v_I0inG = Eigen::Matrix<double,3,1>::Zero(); |

| b_a0 = ba; |

| p_I0inG = Eigen::Matrix<double,3,1>::Zero(); |

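The computed values are assigned to the output parameters. time0 is the timestamp of the last sample in window_secondnew, and the initial velocity and position are set to zero.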

| // Done!!! |

| return true; |

Finally, the function returns true.

[IMU] state->_imu->set_value()

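This function splits the 16x1 IMU state vector into its parts and stores each part in the matching member (_pose, _v, _bg, _ba).

It is used in many places, so it is explained only once here.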

/**

* @brief Sets the value of the estimate

* @param new_value New value we should set

*/

void set_value(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 16);

assert(new_value.cols() == 1);

_pose->set_value(new_value.block(0, 0, 7, 1));

_v->set_value(new_value.block(7, 0, 3, 1));

_bg->set_value(new_value.block(10, 0, 3, 1));

_ba->set_value(new_value.block(13, 0, 3, 1));

_value = new_value;

}

[Type::PoseJPL] _pose->set_value()

Stores the orientation value and the position value.

/**

* @brief Sets the value of the estimate

* @param new_value New value we should set

*/

void set_value(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 7);

assert(new_value.cols() == 1);

//Set orientation value

_q->set_value(new_value.block(0, 0, 4, 1));

//Set position value

_p->set_value(new_value.block(4, 0, 3, 1));

_value = new_value;

}

[Type::JPLQuat] _q->set_value()

Stores the quaternion in _value,

then converts it to a rotation matrix and stores that in _R.

This function is used in many places, so it is explained only once here.

/**

* @brief Sets the value of the estimate and recomputes the internal rotation matrix

* @param new_value New value for the quaternion estimate

*/

void set_value(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 4);

assert(new_value.cols() == 1);

_value = new_value;

//compute associated rotation

_R = ov_core::quat_2_Rot(new_value);

}

ov_core::quat_2_Rot()

This converts a quaternion into a rotation matrix.

I planned to check the details later.

(Details checked on 2020-07-13.)

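Take some vector p.

Let C be the rotation matrix that takes p from the global (ground) frame into the local frame, so that {}^L p = C {}^G p.

With quaternions, the same rotation is written as a quaternion product (not a cross product), treating p as a pure quaternion with zero scalar part:

$$\begin{bmatrix} {}^L\mathbf{p} \\ 0 \end{bmatrix} = \bar{q} \otimes \begin{bmatrix} {}^G\mathbf{p} \\ 0 \end{bmatrix} \otimes \bar{q}^{-1}$$

Expanding the right-hand side and collecting the terms that multiply {}^G p

gives the rotation matrix. Here \bar{q} = [\mathbf{q}^\top, q_4]^\top, with vector part \mathbf{q} and the scalar part q_4 last, as in JPL:

$$\mathbf{C}(\bar{q}) = (2q_4^2-1)\mathbf{I}_3 - 2q_4\lfloor\mathbf{q}\times\rfloor + 2\mathbf{q}\mathbf{q}^\top$$

The last term is the outer product q q^T, a 3x3 matrix. This is what the code below computes with q.block(0,0,3,1) * q.block(0,0,3,1).transpose(). The q^T q in its doc comment should be read as q q^T.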
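The formula R = (2q_4^2 - 1)I_3 - 2q_4[q×] + 2qq^T can be checked numerically with a standard-library-only sketch. jpl_quat_to_rot() is a hypothetical name. It assumes a unit quaternion stored as [q_x, q_y, q_z, q_w] (scalar last, JPL).

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;  // Mat3[row][col]

// R = (2 qw^2 - 1) I - 2 qw [q_v x] + 2 q_v q_v^T, with q_v = [qx, qy, qz].
Mat3 jpl_quat_to_rot(const std::array<double, 4>& q) {
  const double qw = q[3];
  // Skew-symmetric matrix of the vector part.
  const Mat3 sk = {{{0.0, -q[2], q[1]}, {q[2], 0.0, -q[0]}, {-q[1], q[0], 0.0}}};
  Mat3 R{};
  for (int i = 0; i < 3; i++)
    for (int j = 0; j < 3; j++)
      R[i][j] = (i == j ? 2.0 * qw * qw - 1.0 : 0.0) - 2.0 * qw * sk[i][j] +
                2.0 * q[i] * q[j];  // outer product term
  return R;
}
```

For the 90° quaternion about z, the result is [[0,1,0],[-1,0,0],[0,0,1]]. This re-expresses a global vector in a frame rotated by +90° about z; for example, the global y axis becomes the local x axis. That is consistent with the global-to-local meaning of q_GtoI.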

The following OpenVINS function implements this formula.

/**

* @brief Converts JPL quaterion to SO(3) rotation matrix

*

* This is based on equation 62 in [Indirect Kalman Filter for 3D Attitude Estimation](http://mars.cs.umn.edu/tr/reports/Trawny05b.pdf):

* \f{align*}{

* \mathbf{R} = (2q_4^2-1)\mathbf{I}_3-2q_4\lfloor\mathbf{q}\times\rfloor+2\mathbf{q}^\top\mathbf{q}

* @f}

*

* @param[in] q JPL quaternion

* @return 3x3 SO(3) rotation matrix

*/

inline Eigen::Matrix<double, 3, 3> quat_2_Rot(const Eigen::Matrix<double, 4, 1> &q) {

Eigen::Matrix<double, 3, 3> q_x = skew_x(q.block(0, 0, 3, 1));

Eigen::MatrixXd Rot = (2 * std::pow(q(3, 0), 2) - 1) * Eigen::MatrixXd::Identity(3, 3)

- 2 * q(3, 0) * q_x +

2 * q.block(0, 0, 3, 1) * (q.block(0, 0, 3, 1).transpose());

return Rot;

}

[Type::Vec] _p->set_value()

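The listing below appears to be Vec::clone() followed by the base-class Type::set_value(). Note that this set_value is declared virtual without override. Vec does not seem to override set_value, so this base version is what runs: it only checks that the dimensions match and then overwrites _value.

This function is used in many places, so it is explained only once here.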

/**

* @brief Performs all the cloning

*/

Type *clone() override {

Type *Clone = new Vec(_size);

Clone->set_value(value());

Clone->set_fej(fej());

return Clone;

}

/**

* @brief Overwrite value of state's estimate

* @param new_value New value that will overwrite state's value

*/

virtual void set_value(const Eigen::MatrixXd new_value) {

assert(_value.rows()==new_value.rows());

assert(_value.cols()==new_value.cols());

_value = new_value;

}

[IMU] state->_imu->set_fej()

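This also just stores values and is not much different from the above. The only difference is that each member's set_fej() is called instead. FEJ stands for First-Estimate Jacobian: this copy of the state is used as the linearization point when computing Jacobians, and it is meant to stay at the first estimate instead of being updated like the regular value.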

/**

* @brief Sets the value of the first estimate

* @param new_value New value we should set

*/

void set_fej(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 16);

assert(new_value.cols() == 1);

_pose->set_fej(new_value.block(0, 0, 7, 1));

_v->set_fej(new_value.block(7, 0, 3, 1));

_bg->set_fej(new_value.block(10, 0, 3, 1));

_ba->set_fej(new_value.block(13, 0, 3, 1));

_fej = new_value;

}

[Type::PoseJPL] _pose->set_fej()

This is not much different from the above either.

/**

* @brief Sets the value of the first estimate

* @param new_value New value we should set

*/

void set_fej(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 7);

assert(new_value.cols() == 1);

//Set orientation fej value

_q->set_fej(new_value.block(0, 0, 4, 1));

//Set position fej value

_p->set_fej(new_value.block(4, 0, 3, 1));

_fej = new_value;

}

[Type::JPLQuat] _q->set_fej()

Nothing different here either: the quaternion is stored in _fej and its rotation matrix in _Rfej.

/**

* @brief Sets the fej value and recomputes the fej rotation matrix

* @param new_value New value for the quaternion estimate

*/

void set_fej(const Eigen::MatrixXd new_value) override {

assert(new_value.rows() == 4);

assert(new_value.cols() == 1);

_fej = new_value;

//compute associated rotation

_Rfej = ov_core::quat_2_Rot(new_value);

}

[Type::Vec] _p->set_fej()

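Nothing different here either. As with set_value, the listing shows the base Type::set_fej(), which checks the dimensions and overwrites _fej.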

/**

* @brief Performs all the cloning

*/

Type *clone() override {

Type *Clone = new Vec(_size);

Clone->set_value(value());

Clone->set_fej(fej());

return Clone;

}

/**

* @brief Overwrite value of first-estimate

* @param new_value New value that will overwrite state's fej

*/

virtual void set_fej(const Eigen::MatrixXd new_value) {

assert(_fej.rows()==new_value.rows());

assert(_fej.cols()==new_value.cols());

_fej = new_value;

}

cleanup_measurements()

/**

* @brief This function will delete all feature measurements that are older then the specified timestamp

*/

void cleanup_measurements(double timestamp) {

std::unique_lock<std::mutex> lck(mtx);

for (auto it = features_idlookup.begin(); it != features_idlookup.end();) {

// Remove the older measurements

(*it).second->clean_older_measurements(timestamp);

// Count how many measurements

int ct_meas = 0;

for(const auto &pair : (*it).second->timestamps) {

ct_meas += (*it).second->timestamps[pair.first].size();

}

// If delete flag is set, then delete it

if (ct_meas < 1) {

delete (*it).second;

features_idlookup.erase(it++);

} else {

it++;

}

}

}

| std::unique_lock<std::mutex> lck(mtx); |

Lock the mutex.

| for (auto it = features_idlookup.begin(); it != features_idlookup.end();) { |

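A loop over features_idlookup using an iterator. The iterator is not advanced in the for header; the loop body advances it, because the current element may be erased.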

| // Remove the older measurements |

| (*it).second->clean_older_measurements(timestamp); |

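it is an iterator over features_idlookup. So (*it).first is the feature id, and (*it).second is the Feature* stored for that id.

This line calls that Feature's clean_older_measurements() function.

The timestamp passed in is state->_timestamp. When this is called from try_to_initialize(), that is the initialization time, and every measurement at or before it is removed.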

| // Count how many measurements |

| int ct_meas = 0; |

| for(const auto &pair : (*it).second->timestamps) { |

| ct_meas += (*it).second->timestamps[pair.first].size(); |

| } |

timestamps is declared as follows:

/// Timestamps of each UV measurement (mapped by camera ID)

std::unordered_map<size_t, std::vector<double>> timestamps;

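So iterating over (*it).second->timestamps

means iterating over an unordered_map, with one entry per camera.

With a stereo camera the loop runs twice (once per camera that has measurements),

while with a mono camera it runs only once.

The key (size_t) is the camera ID, and the value (std::vector<double>) holds that camera's measurement times.

Each entry is accessed through pair, a const reference to one key-value pair,

so pair.first is the camera ID.

The loop adds up the number of measurements over all cameras into ct_meas. This is a total, not an average.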

| // If delete flag is set, then delete it |

| if (ct_meas < 1) { |

| delete (*it).second; |

| features_idlookup.erase(it++); |

| } else { |

| it++; |

| } |

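If the feature has no measurements left (ct_meas < 1), the Feature object is deleted and its entry is erased from the map. erase(it++) advances the iterator to the next element before the current one is erased, so the loop can safely continue.

Otherwise, the loop simply moves on to the next feature.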
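The erase-while-iterating loop can be sketched with standard containers. TinyFeature, FeatureMap and this cleanup_measurements() are hypothetical, simplified stand-ins that track timestamps only. The sketch does the time filtering and the counting in one loop. It uses the iterator returned by erase(), which is equivalent to erase(it++), and std::unique_ptr instead of a manual delete.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <unordered_map>
#include <vector>

// Hypothetical, simplified stand-in for a Feature: measurement times per camera id.
struct TinyFeature {
  std::unordered_map<std::size_t, std::vector<double>> timestamps;
};

using FeatureMap = std::unordered_map<std::size_t, std::unique_ptr<TinyFeature>>;

// Drop measurements at or before `timestamp`, then drop features left with none.
void cleanup_measurements(FeatureMap& feats, double timestamp) {
  for (auto it = feats.begin(); it != feats.end();) {
    std::size_t ct_meas = 0;
    for (auto& cam : it->second->timestamps) {
      std::vector<double>& ts = cam.second;
      ts.erase(std::remove_if(ts.begin(), ts.end(),
                              [timestamp](double t) { return t <= timestamp; }),
               ts.end());
      ct_meas += ts.size();  // total over all cameras, not an average
    }
    if (ct_meas < 1) {
      it = feats.erase(it);  // unique_ptr frees the feature; erase returns the next iterator
    } else {
      ++it;
    }
  }
}
```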

clean_older_measurements()

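Removes all of this feature's measurements whose timestamp is at or before the given timestamp; during initialization, that is everything up to the initialization time. For each camera, the timestamps, uvs and uvs_norm vectors are erased in lockstep so that they stay aligned.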

void Feature::clean_older_measurements(double timestamp) {

// Loop through each of the cameras we have

for(auto const &pair : timestamps) {

// Assert that we have all the parts of a measurement

assert(timestamps[pair.first].size() == uvs[pair.first].size());

assert(timestamps[pair.first].size() == uvs_norm[pair.first].size());

// Our iterators

auto it1 = timestamps[pair.first].begin();

auto it2 = uvs[pair.first].begin();

auto it3 = uvs_norm[pair.first].begin();

// Loop through measurement times, remove ones that are older then the specified one

while (it1 != timestamps[pair.first].end()) {

if (*it1 <= timestamp) {

it1 = timestamps[pair.first].erase(it1);

it2 = uvs[pair.first].erase(it2);

it3 = uvs_norm[pair.first].erase(it3);

} else {

++it1;

++it2;

++it3;

}

}

}

}
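Keeping three vectors aligned while erasing can also be done in a single pass with a write index, which avoids the repeated element shifting of vector::erase. This is a sketch with a hypothetical clean_older() function, using std::pair in place of Eigen::Vector2f. Like the original, it keeps only measurements strictly newer than timestamp.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Remove every measurement with time <= timestamp from three parallel vectors,
// keeping them aligned (same effect as the it1/it2/it3 loop, but O(n)).
void clean_older(std::vector<double>& times,
                 std::vector<std::pair<float, float>>& uvs,
                 std::vector<std::pair<float, float>>& uvs_norm,
                 double timestamp) {
  assert(times.size() == uvs.size() && times.size() == uvs_norm.size());
  std::size_t w = 0;  // next write position
  for (std::size_t r = 0; r < times.size(); r++) {
    if (times[r] > timestamp) {  // keep only strictly newer measurements
      times[w] = times[r];
      uvs[w] = uvs[r];
      uvs_norm[w] = uvs_norm[r];
      w++;
    }
  }
  times.resize(w);
  uvs.resize(w);
  uvs_norm.resize(w);
}
```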

VioManager::do_feature_propagate_update()

/**

* @brief This will do the propagation and feature updates to the state

* @param timestamp The most recent timestamp we have tracked to

*/

void do_feature_propagate_update(double timestamp);

void VioManager::do_feature_propagate_update(double timestamp) {

//===================================================================================

// State propagation, and clone augmentation

//===================================================================================

// Return if the camera measurement is out of order

if(state->_timestamp >= timestamp) {

printf(YELLOW "image received out of order (prop dt = %3f)\n" RESET,(timestamp-state->_timestamp));

return;

}

// Propagate the state forward to the current update time

// Also augment it with a new clone!

propagator->propagate_and_clone(state, timestamp);

rT3 = boost::posix_time::microsec_clock::local_time();

// If we have not reached max clones, we should just return...

// This isn't super ideal, but it keeps the logic after this easier...

// We can start processing things when we have at least 5 clones since we can start triangulating things...

if((int)state->_clones_IMU.size() < std::min(state->_options.max_clone_size,5)) {

printf("waiting for enough clone states (%d of %d)....\n",(int)state->_clones_IMU.size(),std::min(state->_options.max_clone_size,5));

return;

}

// Return if we where unable to propagate

if(state->_timestamp != timestamp) {

printf(RED "[PROP]: Propagator unable to propagate the state forward in time!\n" RESET);

printf(RED "[PROP]: It has been %.3f since last time we propagated\n" RESET,timestamp-state->_timestamp);

return;

}

//===================================================================================

// MSCKF features and KLT tracks that are SLAM features

//===================================================================================

// Now, lets get all features that should be used for an update that are lost in the newest frame

std::vector<Feature*> feats_lost, feats_marg, feats_slam;

feats_lost = trackFEATS->get_feature_database()->features_not_containing_newer(state->_timestamp);

// Don't need to get the oldest features untill we reach our max number of clones

if((int)state->_clones_IMU.size() > state->_options.max_clone_size) {

feats_marg = trackFEATS->get_feature_database()->features_containing(state->margtimestep());

if(trackARUCO != nullptr && timestamp-startup_time >= params.dt_slam_delay) {

feats_slam = trackARUCO->get_feature_database()->features_containing(state->margtimestep());

}

}

// We also need to make sure that the max tracks does not contain any lost features

// This could happen if the feature was lost in the last frame, but has a measurement at the marg timestep

auto it1 = feats_lost.begin();

while(it1 != feats_lost.end()) {

if(std::find(feats_marg.begin(),feats_marg.end(),(*it1)) != feats_marg.end()) {

//printf(YELLOW "FOUND FEATURE THAT WAS IN BOTH feats_lost and feats_marg!!!!!!\n" RESET);

it1 = feats_lost.erase(it1);

} else {

it1++;

}

}

// Find tracks that have reached max length, these can be made into SLAM features

std::vector<Feature*> feats_maxtracks;

auto it2 = feats_marg.begin();

while(it2 != feats_marg.end()) {

// See if any of our camera's reached max track

bool reached_max = false;

for (const auto &cams: (*it2)->timestamps) {

if ((int)cams.second.size() > state->_options.max_clone_size) {

reached_max = true;

break;

}

}

// If max track, then add it to our possible slam feature list

if(reached_max) {

feats_maxtracks.push_back(*it2);

it2 = feats_marg.erase(it2);

} else {

it2++;

}

}

// Count how many aruco tags we have in our state

int curr_aruco_tags = 0;

auto it0 = state->_features_SLAM.begin();

while(it0 != state->_features_SLAM.end()) {

if ((int) (*it0).second->_featid <= state->_options.max_aruco_features) curr_aruco_tags++;

it0++;

}

// Append a new SLAM feature if we have the room to do so

// Also check that we have waited our delay amount (normally prevents bad first set of slam points)

if(state->_options.max_slam_features > 0 && timestamp-startup_time >= params.dt_slam_delay && (int)state->_features_SLAM.size() < state->_options.max_slam_features+curr_aruco_tags) {

// Get the total amount to add, then the max amount that we can add given our marginalize feature array

int amount_to_add = (state->_options.max_slam_features+curr_aruco_tags)-(int)state->_features_SLAM.size();

int valid_amount = (amount_to_add > (int)feats_maxtracks.size())? (int)feats_maxtracks.size() : amount_to_add;

// If we have at least 1 that we can add, lets add it!

// Note: we remove them from the feat_marg array since we don't want to reuse information...

if(valid_amount > 0) {

feats_slam.insert(feats_slam.end(), feats_maxtracks.end()-valid_amount, feats_maxtracks.end());

feats_maxtracks.erase(feats_maxtracks.end()-valid_amount, feats_maxtracks.end());

}

}

// Loop through current SLAM features; we have tracks of them, so grab them for this update!
// Note: if we have a slam feature that has lost tracking, then we should marginalize it out
// Note: if you do not use FEJ, these types of slam features *degrade* the estimator performance.
for (std::pair<const size_t, Landmark*> &landmark : state->_features_SLAM) {
    if(trackARUCO != nullptr) {
        Feature* feat1 = trackARUCO->get_feature_database()->get_feature(landmark.second->_featid);
        if(feat1 != nullptr) feats_slam.push_back(feat1);
    }
    Feature* feat2 = trackFEATS->get_feature_database()->get_feature(landmark.second->_featid);
    if(feat2 != nullptr) feats_slam.push_back(feat2);
    if(feat2 == nullptr) landmark.second->should_marg = true;
}

// Let's marginalize out all old SLAM features here
// These are ones that were not successfully tracked into the current frame
// We do *NOT* marginalize out our aruco tags
StateHelper::marginalize_slam(state);
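Together, the lookup loop and `marginalize_slam()` act as a flag-then-sweep pass. The sketch below is a simplified model under these assumptions:

- Landmarks are a hypothetical `LandmarkStub`.
- "Tracked this frame" is a set of ids.
- Flagged landmarks with an id above `max_aruco_features` are simply erased. This matches the "do *NOT* marginalize out our aruco tags" comment. The real function also marginalizes them out of the covariance, which is not modeled here.

`flag_and_drop_lost` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <set>

// Hypothetical stand-in for the state's Landmark type.
struct LandmarkStub {
    std::size_t featid;
    bool should_marg = false;
};

// Flag every landmark that no tracker returned this frame, then erase the flagged
// ones. Ids <= max_aruco_features are treated as aruco tags and kept.
// Returns the number of landmarks removed.
int flag_and_drop_lost(std::map<std::size_t, LandmarkStub>& features_SLAM,
                       const std::set<std::size_t>& tracked_ids,
                       int max_aruco_features) {
    for (auto& kv : features_SLAM) {
        if (tracked_ids.count(kv.second.featid) == 0) kv.second.should_marg = true;
    }
    int removed = 0;
    for (auto it = features_SLAM.begin(); it != features_SLAM.end();) {
        if (it->second.should_marg && static_cast<int>(it->first) > max_aruco_features) {
            it = features_SLAM.erase(it);  // real code also marginalizes the covariance here
            ++removed;
        } else {
            ++it;
        }
    }
    return removed;
}
```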

// Separate our SLAM features into new ones, and old ones
std::vector<Feature*> feats_slam_DELAYED, feats_slam_UPDATE;
for(size_t i=0; i<feats_slam.size(); i++) {
    if(state->_features_SLAM.find(feats_slam.at(i)->featid) != state->_features_SLAM.end()) {
        feats_slam_UPDATE.push_back(feats_slam.at(i));
        //printf("[UPDATE-SLAM]: found old feature %d (%d measurements)\n",(int)feats_slam.at(i)->featid,(int)feats_slam.at(i)->timestamps_left.size());
    } else {
        feats_slam_DELAYED.push_back(feats_slam.at(i));
        //printf("[UPDATE-SLAM]: new feature ready %d (%d measurements)\n",(int)feats_slam.at(i)->featid,(int)feats_slam.at(i)->timestamps_left.size());
    }
}
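The split depends only on whether the feature id is already a key of `_features_SLAM`:

- Known features get a regular EKF update.
- Unknown features go through delayed initialization.

This sketch reduces the state to a set of ids, which is a hypothetical simplification. `split_slam` is also a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// Splits candidate ids into those already in the SLAM state (regular update)
// and new ones that need delayed initialization, preserving input order.
void split_slam(const std::vector<std::size_t>& feats_slam,
                const std::set<std::size_t>& ids_in_state,
                std::vector<std::size_t>& update,
                std::vector<std::size_t>& delayed) {
    for (std::size_t id : feats_slam) {
        if (ids_in_state.find(id) != ids_in_state.end()) {
            update.push_back(id);
        } else {
            delayed.push_back(id);
        }
    }
}
```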

// Concatenate our MSCKF feature arrays (i.e., ones not being used for slam updates)
std::vector<Feature*> featsup_MSCKF = feats_lost;
featsup_MSCKF.insert(featsup_MSCKF.end(), feats_marg.begin(), feats_marg.end());
featsup_MSCKF.insert(featsup_MSCKF.end(), feats_maxtracks.begin(), feats_maxtracks.end());

//===================================================================================
// Now that we have a list of features, let's do the EKF update for MSCKF and SLAM!
//===================================================================================

// Pass them to our MSCKF updater
// NOTE: if we have more than the max, we select the "best" ones (i.e. max tracks) for this update
// NOTE: this should only really be used if you want to track a lot of features, or have limited computational resources
if((int)featsup_MSCKF.size() > state->_options.max_msckf_in_update)
    featsup_MSCKF.erase(featsup_MSCKF.begin(), featsup_MSCKF.end()-state->_options.max_msckf_in_update);
updaterMSCKF->update(state, featsup_MSCKF);
rT4 = boost::posix_time::microsec_clock::local_time();
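Note that the cap erases from the *front* of the list. `featsup_MSCKF` is built in this order:

1. `feats_lost`
2. `feats_marg`
3. `feats_maxtracks`

The max-track features are appended last, so they are the ones that survive the trim. This is what the "best ones" comment refers to. Here is a generic sketch of the trim (`keep_tail` is a hypothetical name):

```cpp
#include <cassert>
#include <vector>

// Keeps only the last max_in_update elements of v (drops from the front).
// A negative limit is treated as 0 here; the original assumes a non-negative option.
template <typename T>
void keep_tail(std::vector<T>& v, int max_in_update) {
    if (max_in_update < 0) max_in_update = 0;
    if (static_cast<int>(v.size()) > max_in_update) {
        v.erase(v.begin(), v.end() - max_in_update);
    }
}
```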

// Perform SLAM delay init and update
// NOTE: we provide the option here to do a *sequential* update
// NOTE: this will be a lot faster but won't be as accurate.
std::vector<Feature*> feats_slam_UPDATE_TEMP;
while(!feats_slam_UPDATE.empty()) {
    // Get sub vector of the features we will update with
    std::vector<Feature*> featsup_TEMP;
    featsup_TEMP.insert(featsup_TEMP.begin(), feats_slam_UPDATE.begin(), feats_slam_UPDATE.begin()+std::min(state->_options.max_slam_in_update,(int)feats_slam_UPDATE.size()));
    feats_slam_UPDATE.erase(feats_slam_UPDATE.begin(), feats_slam_UPDATE.begin()+std::min(state->_options.max_slam_in_update,(int)feats_slam_UPDATE.size()));
    // Do the update
    updaterSLAM->update(state, featsup_TEMP);
    feats_slam_UPDATE_TEMP.insert(feats_slam_UPDATE_TEMP.end(), featsup_TEMP.begin(), featsup_TEMP.end());
}
feats_slam_UPDATE = feats_slam_UPDATE_TEMP;
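The loop consumes `feats_slam_UPDATE` in chunks of at most `max_slam_in_update` features. It then rebuilds the list from the chunks, so the timing printout can still report its size.

This sketch replaces the updater with a callback, which is a hypothetical stand-in; `updaterSLAM->update` is not modeled. One difference is deliberate: the sketch clamps the chunk size to at least 1. With `max_slam_in_update` set to 0, the loop as written would never shrink the list.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Feeds `feats` to `update` in consecutive chunks of at most max_in_update
// elements and returns everything that was processed, in order.
// `update` stands in for updaterSLAM->update(state, chunk).
template <typename T, typename UpdateFn>
std::vector<T> update_in_chunks(std::vector<T> feats, int max_in_update, UpdateFn&& update) {
    const int chunk_size = std::max(1, max_in_update);  // guard against a 0 (or negative) limit
    std::vector<T> processed;
    while (!feats.empty()) {
        const int n = std::min(chunk_size, static_cast<int>(feats.size()));
        std::vector<T> chunk(feats.begin(), feats.begin() + n);
        feats.erase(feats.begin(), feats.begin() + n);
        update(chunk);
        processed.insert(processed.end(), chunk.begin(), chunk.end());
    }
    return processed;
}
```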

rT5 = boost::posix_time::microsec_clock::local_time();
updaterSLAM->delayed_init(state, feats_slam_DELAYED);
rT6 = boost::posix_time::microsec_clock::local_time();

//===================================================================================
// Update our visualization feature set, and clean up the old features
//===================================================================================

// Save all the MSCKF features used in the update
good_features_MSCKF.clear();
for(Feature* feat : featsup_MSCKF) {
    good_features_MSCKF.push_back(feat->p_FinG);
    feat->to_delete = true;
}

// Remove features that were used for the update from our extractors at the last timestep
// This allows for measurements to be used in the future if they failed to be used this time
// Note we need to do this before we feed a new image, as we want all new measurements to NOT be deleted
trackFEATS->get_feature_database()->cleanup();
if(trackARUCO != nullptr) {
    trackARUCO->get_feature_database()->cleanup();
}
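Features used in this update are flagged with `to_delete`, and `cleanup()` then drops them from the database. Unused features keep their measurements for a later timestep.

This sketch models that sweep over a hypothetical id-to-feature map that uses `std::unique_ptr` for ownership. The ownership details of the real `FeatureDatabase` are not modeled. `TrackedFeature` and `cleanup_flagged` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>

// Hypothetical stand-in for a tracked feature; only the deletion flag matters here.
struct TrackedFeature {
    bool to_delete = false;
};

// Erases every feature whose to_delete flag is set and returns how many were removed.
std::size_t cleanup_flagged(std::map<std::size_t, std::unique_ptr<TrackedFeature>>& db) {
    std::size_t removed = 0;
    for (auto it = db.begin(); it != db.end();) {
        if (it->second->to_delete) {
            it = db.erase(it);  // unique_ptr frees the feature
            ++removed;
        } else {
            ++it;
        }
    }
    return removed;
}
```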

//===================================================================================
// Cleanup, marginalize out what we don't need any more...
//===================================================================================

// First do anchor change if we are about to lose an anchor pose
updaterSLAM->change_anchors(state);

// Marginalize the oldest clone of the state if we are at max length
if((int)state->_clones_IMU.size() > state->_options.max_clone_size) {
    // Clean up any feature measurements older than the marginalization time
    trackFEATS->get_feature_database()->cleanup_measurements(state->margtimestep());
    if(trackARUCO != nullptr) {
        trackARUCO->get_feature_database()->cleanup_measurements(state->margtimestep());
    }
    // Finally marginalize that clone
    StateHelper::marginalize_old_clone(state);
}
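The clones form a sliding window. Once the window holds more than `max_clone_size` clones, two things happen:

1. Measurements tied to the oldest clone time are removed.
2. That clone is marginalized.

The sketch below uses a hypothetical `Window` struct and rests on two assumptions:

- `margtimestep()` returns the oldest clone time.
- Measurements at or before that time are discarded.

`marginalize_oldest` is a hypothetical name.

```cpp
#include <cassert>
#include <map>
#include <vector>

// Hypothetical simplified window: clone timestamps -> pose placeholder,
// plus the measurement timestamps of one tracked feature.
struct Window {
    std::map<double, int> clones;       // ordered by time, begin() is the oldest
    std::vector<double> feature_times;  // measurement times of a tracked feature
};

// If over capacity, drop measurements at or before the oldest clone time,
// then remove that clone (models margtimestep + cleanup_measurements + marginalize_old_clone).
void marginalize_oldest(Window& w, int max_clone_size) {
    if (static_cast<int>(w.clones.size()) <= max_clone_size) return;
    const double marg_time = w.clones.begin()->first;
    std::vector<double> kept;
    for (double t : w.feature_times) {
        if (t > marg_time) kept.push_back(t);
    }
    w.feature_times = kept;
    w.clones.erase(w.clones.begin());
}
```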

// Finally, if we are optimizing our intrinsics, update our trackers
if(state->_options.do_calib_camera_intrinsics) {
    // Collect the current intrinsics and fisheye flag of each camera
    std::map<size_t, Eigen::VectorXd> cameranew_calib;
    std::map<size_t, bool> cameranew_fisheye;
    for(int i=0; i<state->_options.num_cameras; i++) {
        Vec* calib = state->_cam_intrinsics.at(i);
        bool isfish = state->_cam_intrinsics_model.at(i);
        cameranew_calib.insert({i,calib->value()});
        cameranew_fisheye.insert({i,isfish});
    }
    // Update the trackers and their databases
    trackFEATS->set_calibration(cameranew_calib, cameranew_fisheye, true);
    if(trackARUCO != nullptr) {
        trackARUCO->set_calibration(cameranew_calib, cameranew_fisheye, true);
    }
}

rT7 = boost::posix_time::microsec_clock::local_time();

//===================================================================================
// Debug info, and stats tracking
//===================================================================================

// Get timing statistics information
double time_track = (rT2-rT1).total_microseconds() * 1e-6;
double time_prop = (rT3-rT2).total_microseconds() * 1e-6;
double time_msckf = (rT4-rT3).total_microseconds() * 1e-6;
double time_slam_update = (rT5-rT4).total_microseconds() * 1e-6;
double time_slam_delay = (rT6-rT5).total_microseconds() * 1e-6;
double time_marg = (rT7-rT6).total_microseconds() * 1e-6;
double time_total = (rT7-rT1).total_microseconds() * 1e-6;
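Each stage time is the difference between two consecutive checkpoints, converted from microseconds to seconds. The sketch below does the same bookkeeping with `std::chrono::steady_clock` swapped in for `boost::posix_time::microsec_clock`. This is a substitution, not what OpenVINS uses here. `stage_seconds` is a hypothetical helper.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Converts consecutive checkpoints (rT1..rT7 in the code above) into per-stage
// durations in seconds, mirroring total_microseconds() * 1e-6.
std::vector<double> stage_seconds(const std::vector<std::chrono::steady_clock::time_point>& checkpoints) {
    std::vector<double> out;
    for (std::size_t i = 1; i < checkpoints.size(); ++i) {
        const auto us = std::chrono::duration_cast<std::chrono::microseconds>(
                            checkpoints[i] - checkpoints[i - 1]).count();
        out.push_back(static_cast<double>(us) * 1e-6);
    }
    return out;
}
```

One reason to prefer `steady_clock` for this kind of profiling: it is monotonic, whereas a wall-clock local time can jump if the system clock is adjusted.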

// Timing information

printf(BLUE "[TIME]: %.4f seconds for tracking\n" RESET, time_track);

printf(BLUE "[TIME]: %.4f seconds for propagation\n" RESET, time_prop);

printf(BLUE "[TIME]: %.4f seconds for MSCKF update (%d features)\n" RESET, time_msckf, (int)featsup_MSCKF.size());

if(state->_options.max_slam_features > 0) {

printf(BLUE "[TIME]: %.4f seconds for SLAM update (%d feats)\n" RESET, time_slam_update, (int)feats_slam_UPDATE.size());

printf(BLUE "[TIME]: %.4f seconds for SLAM delayed init (%d feats)\n" RESET, time_slam_delay, (int)feats_slam_DELAYED.size());

}

printf(BLUE "[TIME]: %.4f seconds for marginalization (%d clones in state)\n" RESET, time_marg, (int)state->_clones_IMU.size());

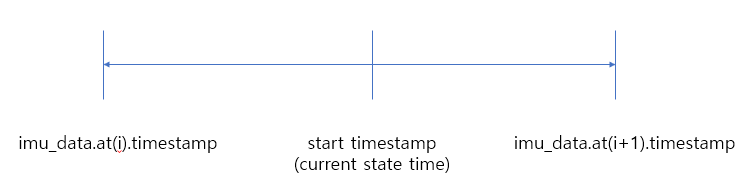

printf(BLUE "[TIME]: %.4f seconds for total\n" RESET, time_total);